Anthropic, the company behind the Claude large language model (LLM), recently announced the Model Context Protocol (MCP). MCP is a protocol that helps LLMs use external data sources and functions, and Anthropic has released it as an open protocol available to everyone (reference). As a result, many services have started supporting MCP, and many users are interested in how to connect LLMs with external services through it.

In this article, we will explain the concept and architecture of MCP, which is receiving a lot of attention, and introduce how to implement an MCP server using the LINE Messaging API.

Introduction to MCP

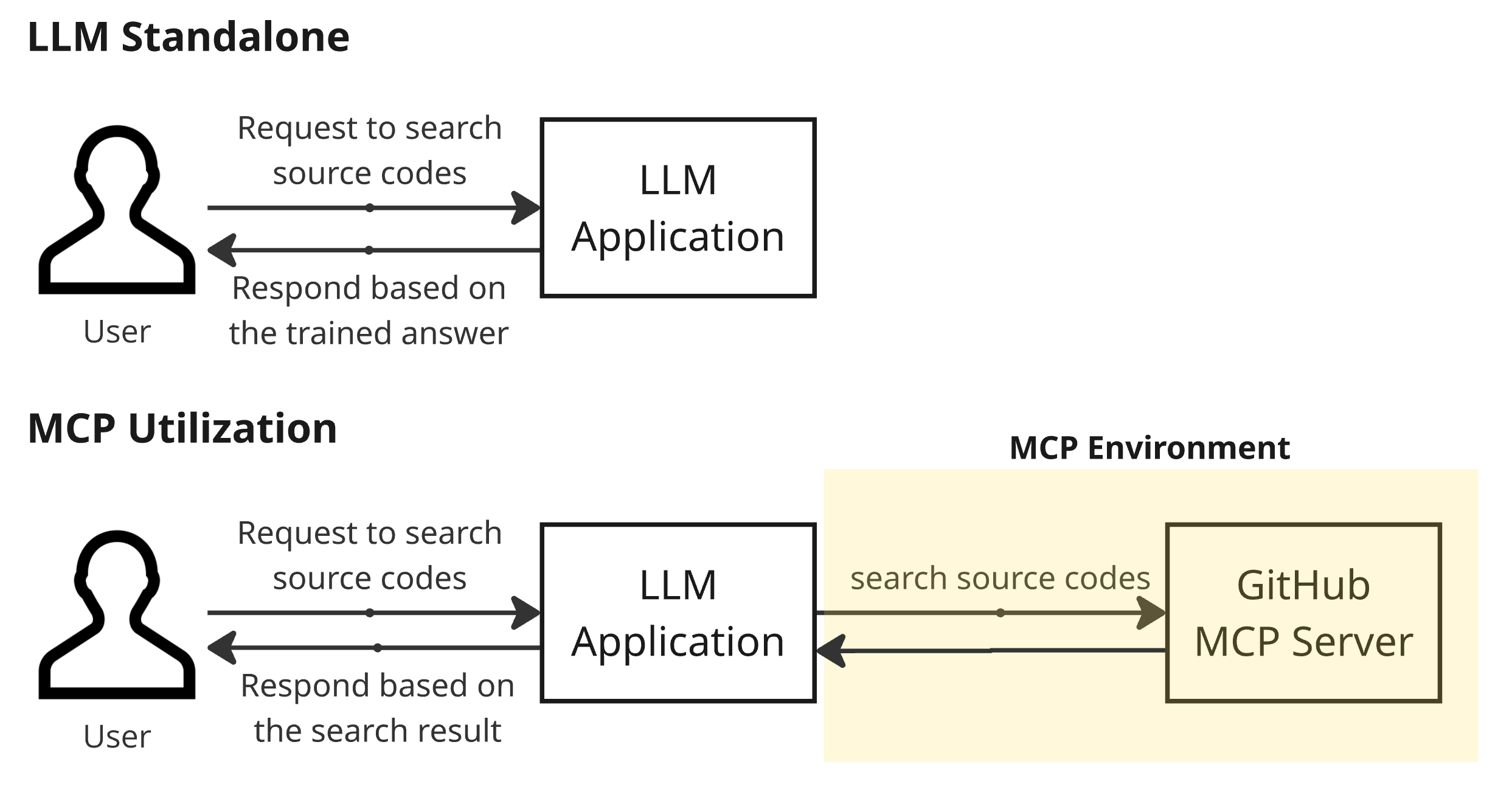

MCP is a protocol that supports LLMs in using external functions. Below is a diagram comparing the use of LLMs with and without the use of MCP.

For example, suppose you ask an LLM application to find specific code in Armeria, LINE's open-source library. Without MCP, the application answers from what the pre-trained model remembers rather than accessing GitHub to inspect the Armeria repository, which can lead to hallucinated answers.

With GitHub MCP, on the other hand, the LLM application accesses GitHub, searches the actual Armeria repository, and responds based on the search results. For this to work, the external service (GitHub) must provide an MCP server, and the LLM application must call that server. The protocol that lets LLM applications use external functions in this way is MCP (for reference, GitHub actually provides GitHub MCP).

Architecture

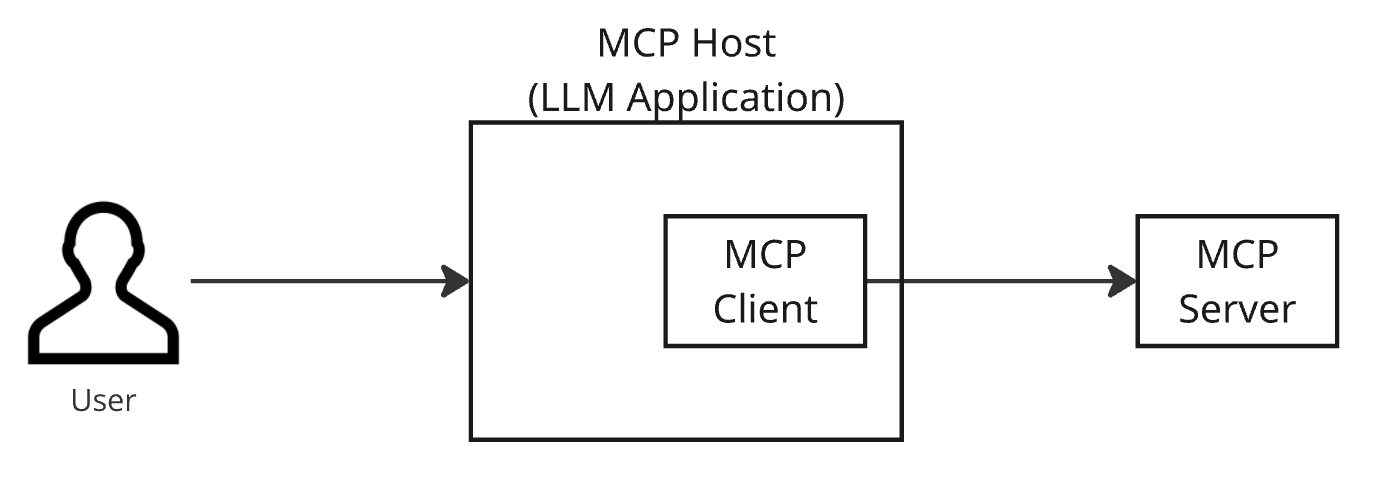

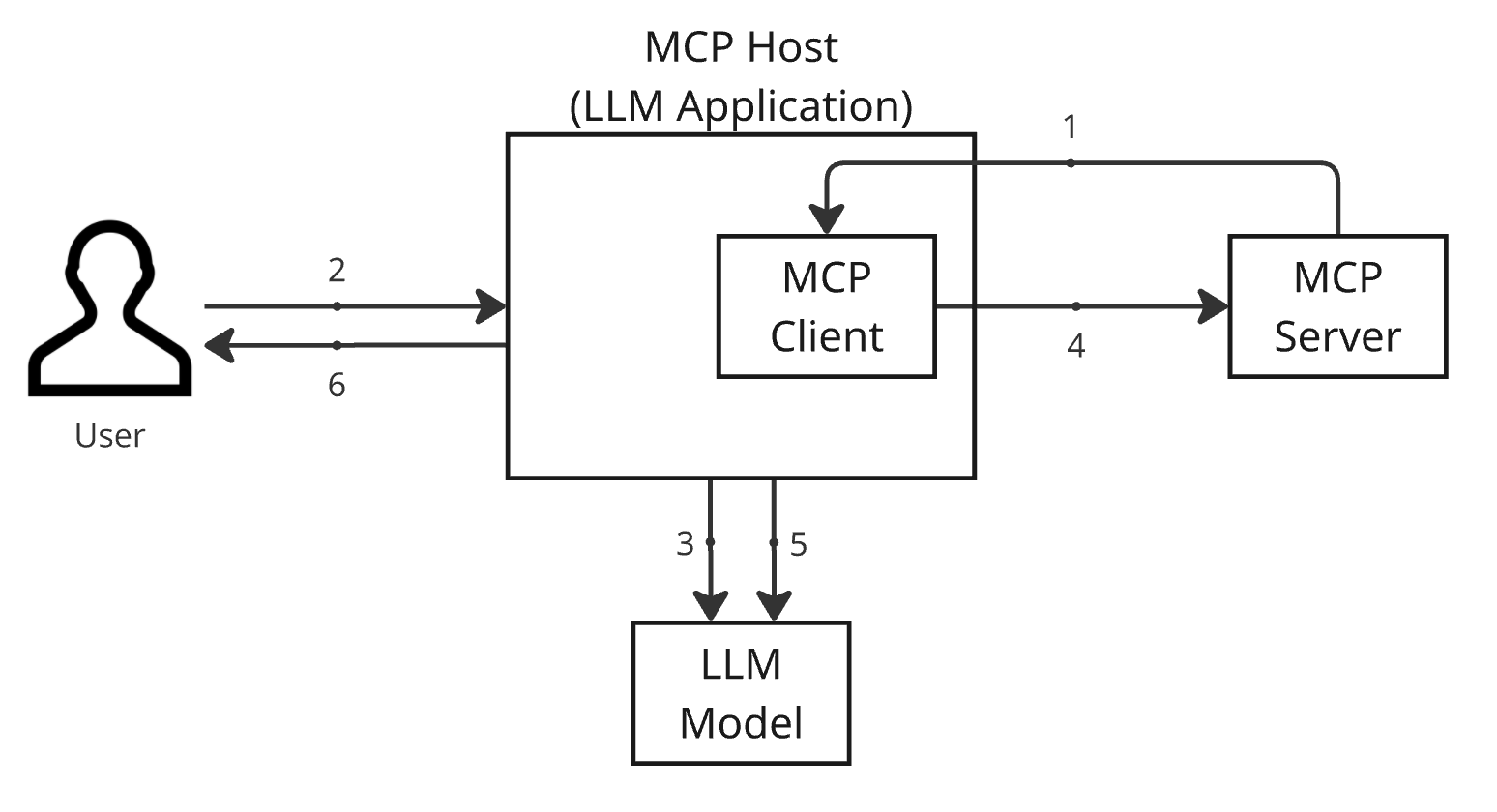

MCP follows a client-server architecture where the host's client connects to the server.

This architecture consists of three main entities: host, client, and server, with the following roles:

- Host: The LLM application using MCP, which receives and responds to user requests. In the example in the next section, the Claude desktop application corresponds to the host.

- Client: The internal module of the host that sends requests to the MCP server and receives responses to deliver to the host.

- Server: The entity outside the host that processes requests received from the MCP client and responds.

Components

The elements that an MCP host can use are diverse. Among them, we will introduce the most essential elements: tools and resources.

Tools

Tools are a core element of MCP, provided by the MCP server to execute external functions. The MCP server provides an endpoint tools/list to check the list of executable tools and an endpoint tools/call to call the desired tool. The MCP host uses the tools provided by the MCP server through the MCP client when external function calls are needed in the process of handling user prompts.

For example, the GitHub MCP server provides a tool called create_pull_request, and users can create a new PR using this tool through a prompt.
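As a rough illustration of the two endpoints: MCP messages are JSON-RPC 2.0 objects, so `tools/list` and `tools/call` are method names carried in the `method` field. The sketch below only builds the request payloads and sends nothing. The `jsonrpc_request` helper and the argument values passed to `create_pull_request` are hypothetical and are not taken from the GitHub MCP server's actual schema.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object (hypothetical helper)."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Ask the server which tools it offers.
list_request = jsonrpc_request("tools/list")

# Call one tool by name; the argument names here are illustrative only.
call_request = jsonrpc_request(
    "tools/call",
    {
        "name": "create_pull_request",
        "arguments": {"title": "Fix typo", "head": "fix-typo", "base": "main"},
    },
)

print(json.dumps(list_request))
print(json.dumps(call_request, indent=2))
```

In a real host, the MCP client would write these objects to the server's transport and read back the matching responses.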

Resources

Resources are also a core element of MCP, meaning various forms of data or content that the MCP server can provide. Resources can exist in various forms, such as file contents, database records, or API responses. The MCP client requests and receives a list of available resources from the MCP server and then requests the necessary resources to retrieve data. The data retrieved by the MCP client is delivered to the MCP host, which can use this data in response to user prompts.

For example, the GitHub MCP server provides the source code of a specific repository through the repo://{owner}/{repo}/contents{/path*} API resource.
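To make the resource flow concrete, here is a minimal sketch of the two requests a client might send: `resources/list` to discover resources and `resources/read` to fetch one by URI. The `repo_contents_uri` helper is hypothetical and expands the URI template quoted above by hand; the owner, repository, and path values are examples only.

```python
import json


def repo_contents_uri(owner: str, repo: str, path_segments: list) -> str:
    """Expand repo://{owner}/{repo}/contents{/path*} by hand (hypothetical helper)."""
    path = "".join(f"/{segment}" for segment in path_segments)
    return f"repo://{owner}/{repo}/contents{path}"


# Request the list of resources the server exposes.
list_request = {"jsonrpc": "2.0", "id": 1, "method": "resources/list"}

# Request the content behind one concrete URI.
uri = repo_contents_uri("line", "armeria", ["README.md"])
read_request = {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "resources/read",
    "params": {"uri": uri},
}

print(json.dumps(list_request))
print(json.dumps(read_request))
```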

Operation

MCP can operate in various ways depending on how it is implemented. In this article, we will look at the most basic method along with the diagram below.

- When the MCP host is executed, it calls the `tools/list` endpoint of the MCP server through the MCP client to receive a list of available tools.

- The user sends a prompt to the MCP host.

- The MCP host delivers the user's prompt and the pre-received tool list to the LLM model. The LLM model responds that it will use the tools it deems necessary from the tool list.

- The MCP host requests the use of the tool through the `tools/call` endpoint of the MCP server via the MCP client.

- The MCP host delivers the tool response from the MCP server and the existing user's prompt back to the LLM model, which generates the final response based on this.

- The MCP host processes the final response received from the LLM model and delivers it to the user.
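The steps above can be sketched as a small in-memory simulation. Everything here is a stand-in: `FakeServer` plays the MCP server, `fake_llm` plays the LLM, and the `echo` tool is invented for the example. No real MCP SDK or model API is involved.

```python
from typing import Optional


class FakeServer:
    """Stand-in for an MCP server exposing one hypothetical tool."""

    def list_tools(self) -> list:
        return [{"name": "echo", "description": "Returns the given text unchanged."}]

    def call_tool(self, name: str, arguments: dict) -> str:
        if name != "echo":
            raise ValueError(f"unknown tool: {name}")
        return arguments["text"]


def fake_llm(prompt: str, tools: list, tool_result: Optional[str] = None) -> dict:
    """Stand-in for the LLM: asks for a tool first, then writes the final answer."""
    if tool_result is None:
        return {"type": "tool_use", "name": tools[0]["name"], "arguments": {"text": prompt}}
    return {"type": "final", "text": f"Tool said: {tool_result}"}


def run_host(prompt: str, server: FakeServer) -> str:
    # The host fetches the tool list once (tools/list).
    tools = server.list_tools()
    # The user's prompt and the tool list go to the LLM.
    decision = fake_llm(prompt, tools)
    if decision["type"] == "tool_use":
        # The host executes the requested tool (tools/call).
        result = server.call_tool(decision["name"], decision["arguments"])
        # The tool result and the original prompt go back to the LLM.
        decision = fake_llm(prompt, tools, result)
    # The final response is delivered to the user.
    return decision["text"]


print(run_host("hello", FakeServer()))
```

A real host may loop over several tool calls before the model produces its final answer; this sketch handles a single call to keep the control flow visible.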

Use case of MCP with LINE Messaging API

Now, let's use MCP directly by utilizing LINE's Messaging API. LINE provides a Messaging API for LINE Official Accounts (OA), which allows actions such as sending messages to users who have added the official account as a friend. Let's use this to build an MCP server. It would be helpful to refer to the MCP official user guide's For Server Developers document and the LINE Developers site Messaging API document together.

Preparation

To build an MCP server using the LINE Messaging API, the following preparations are needed:

- Install Claude desktop application (reference)

- Prepare to use LINE Messaging API

  - Refer to the Get started with the Messaging API document on LINE Developers to create an official account, activate the Messaging API, and create a channel

  - Access the LINE Developer Console and issue a channel access token from the Messaging API tab of the created channel

  - Add the created channel (LINE Official Account) as a friend on the LINE account

Once the preparation for using the LINE Messaging API is complete, use the issued channel access token to verify that the API is working correctly. In this article, we will verify it in the shell. Put the channel access token issued earlier in the Authorization header and call the broadcast API as follows (refer to the Send broadcast message document of the LINE Messaging API official guide for the specifications of the broadcast API).

```shell
curl -v -X POST https://api.line.me/v2/bot/message/broadcast \
  -H 'Content-Type: application/json' \
  -H "Authorization: Bearer {Channel Access Token}" \
  -H "X-Line-Retry-Key: $(uuidgen)" \
  -d '{
    "messages": [
      {
        "type": "text",
        "text": "Hello, world"
      }
    ]
  }'
```

If you receive a message from the official account you added as a friend when you run the above command, it worked!

Developing the MCP server

Now, let's develop the MCP server in earnest. First, refer to the MCP official user guide to install uv, create a Python project, and create main.py.

```shell
# Create a new directory for the project
uv init line-mcp
cd line-mcp

# Create and activate a virtual environment
uv venv
source .venv/bin/activate

# Install dependencies
uv add "mcp[cli]" httpx

# Create server file
touch main.py
```

Then add the following Python code to main.py. The code below creates a simple MCP server that provides a tool called broadcast_message. When the MCP client sends a message through the broadcast_message tool, it calls the `https://api.line.me/v2/bot/message/broadcast` endpoint of the LINE Messaging API to send the received message to all friends.

```python
# main.py
import os
import uuid

import httpx
from mcp.server.fastmcp import FastMCP

# Initialize the FastMCP server with the name "line".
# httpx is the only third-party package this server uses.
mcp = FastMCP("line", dependencies=["httpx"])

# Get CHANNEL_ACCESS_TOKEN from an environment variable.
# Set it to the token obtained from the LINE Developer Console.
CHANNEL_ACCESS_TOKEN = os.environ.get("CHANNEL_ACCESS_TOKEN")


@mcp.tool(
    name="broadcast_message",
    description="Broadcasts a message using the LINE Messaging API. The 'messages' parameter allows up to 5 messages. Supported message types:\n"
    "- Text message: {'type': 'text', 'text': 'Hello, world'}\n",
)
def broadcast_message(messages: list):
    # Set HTTP request headers
    # Content-Type: JSON format
    # Authorization: Bearer token for LINE API authentication
    # X-Line-Retry-Key: Unique key (UUID) to prevent duplicate requests
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {CHANNEL_ACCESS_TOKEN}",
        "X-Line-Retry-Key": str(uuid.uuid4()),
    }

    # Send HTTP POST request to LINE Messaging API
    # Use the broadcast endpoint to send the input messages to all friends.
    with httpx.Client() as client:
        response = client.post(
            "https://api.line.me/v2/bot/message/broadcast",
            headers=headers,
            json={"messages": messages},
        )

    # Return the raw response body so the host can see the API result
    return response.text


if __name__ == "__main__":
    # Run FastMCP server with stdio transport
    mcp.run(transport="stdio")
```

To verify that the project was created correctly, run the following command to start the MCP server. If it works well, the MCP server setup is complete.

```shell
uv run main.py
```

Setting up the MCP server in the Claude desktop application

To use the MCP server in the Claude desktop application (MCP host), the server information must be registered with the host. To register with the MCP host, you need to modify the configuration file. Refer to the MCP official user guide to open the claude_desktop_config.json configuration file and add the MCP server (line-mcp) you worked on earlier.

```json
{
  "mcpServers": {
    "line-mcp": {
      "command": "uv",
      "args": [
        "--directory",
        "/{Project Parent Location}/line-mcp",
        "run",
        "main.py"
      ],
      "env": {
        "CHANNEL_ACCESS_TOKEN": "{channel_access_token}"
      }
    }
  }
}
```

With the above settings, the MCP server you built will automatically run according to the information set when the Claude desktop application is launched. Let's briefly look at what information was entered in the configuration file above and what role the entered information plays.

- Add `line-mcp` to `mcpServers` (register MCP server with the name `line-mcp`)

- Enter the path of `uv` in `command` (enter the server execution command)

  - If `uv` does not work properly on macOS, run `which uv` in the shell to check the path of `uv` and enter that path

- Enter the location of the project to be executed and the execution file in `args`

- Add `CHANNEL_ACCESS_TOKEN` setting to `env` and enter the issued channel access token
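As a rough mental model of what a host does with such an entry, the sketch below parses a config of the same shape and assembles the command line and environment that could be used to spawn the server as a child process. This is an assumption about the general mechanism, not the Claude desktop application's actual code. `build_launch` is a hypothetical helper, and the path and token are placeholders.

```python
import json
import os

CONFIG_TEXT = """
{
  "mcpServers": {
    "line-mcp": {
      "command": "uv",
      "args": ["--directory", "/path/to/line-mcp", "run", "main.py"],
      "env": {"CHANNEL_ACCESS_TOKEN": "placeholder-token"}
    }
  }
}
"""


def build_launch(config: dict, server_name: str) -> tuple:
    """Return (argv, env) for one configured server (hypothetical helper)."""
    entry = config["mcpServers"][server_name]
    # command comes first, followed by args in the order they were written
    argv = [entry["command"], *entry.get("args", [])]
    # env entries are layered on top of the current process environment
    env = {**os.environ, **entry.get("env", {})}
    return argv, env


argv, env = build_launch(json.loads(CONFIG_TEXT), "line-mcp")
print(argv)

# A host could then start the server with something like
#   subprocess.Popen(argv, env=env, stdin=subprocess.PIPE, stdout=subprocess.PIPE)
# and exchange MCP messages over the child's stdin and stdout.
```

This also shows why `main.py` can read the token with `os.environ.get`: the value from `env` becomes an ordinary environment variable of the server process.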

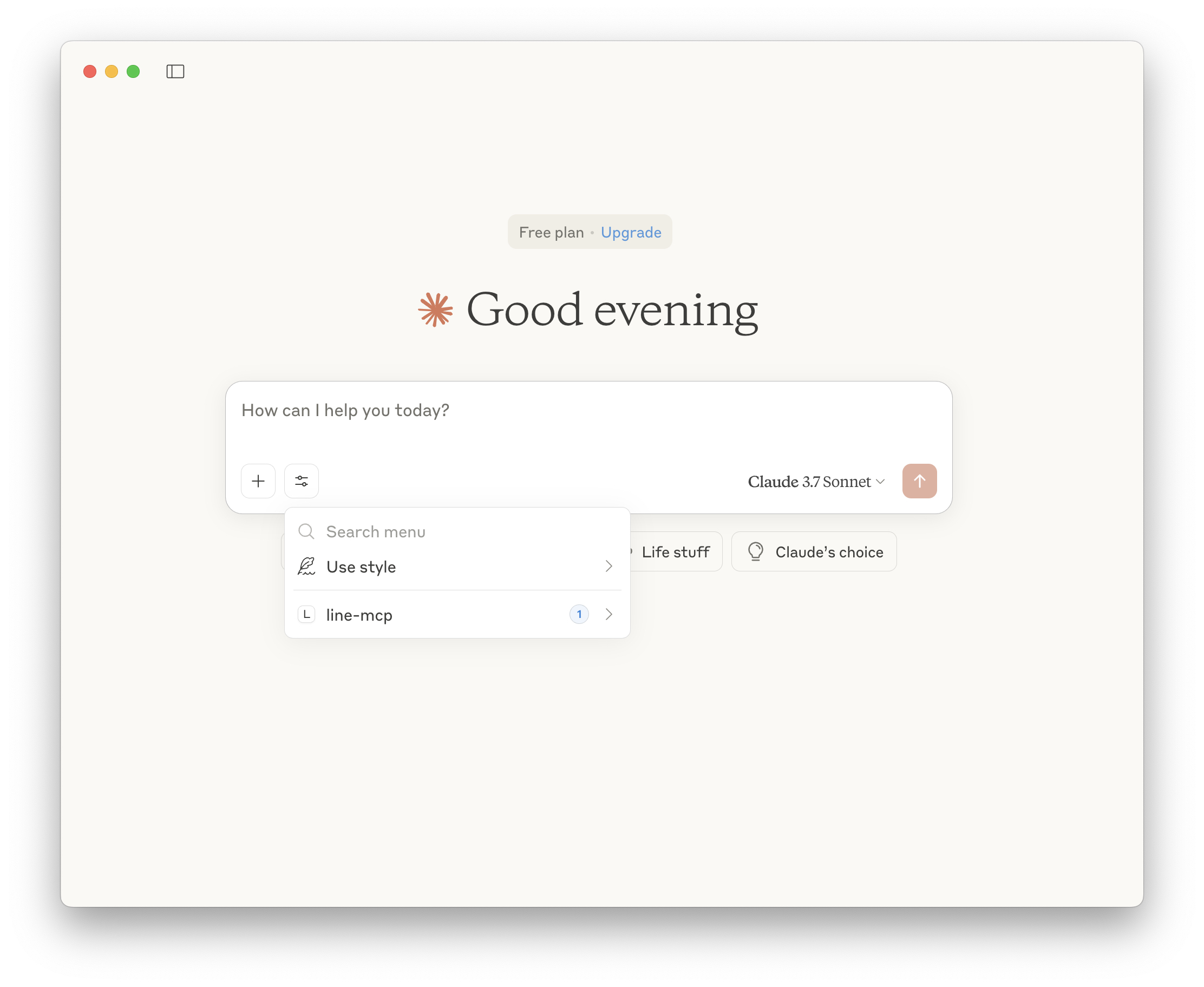

After saving the configuration file, run the Claude desktop application. If set correctly, you can see that there is an available MCP tool as shown in the image below.

Using MCP

Now let's put the MCP server we built and the Claude desktop application to work. Assume the following situation.

We own a Japanese sushi restaurant called "Oishi" and use a LINE Official Account for marketing.

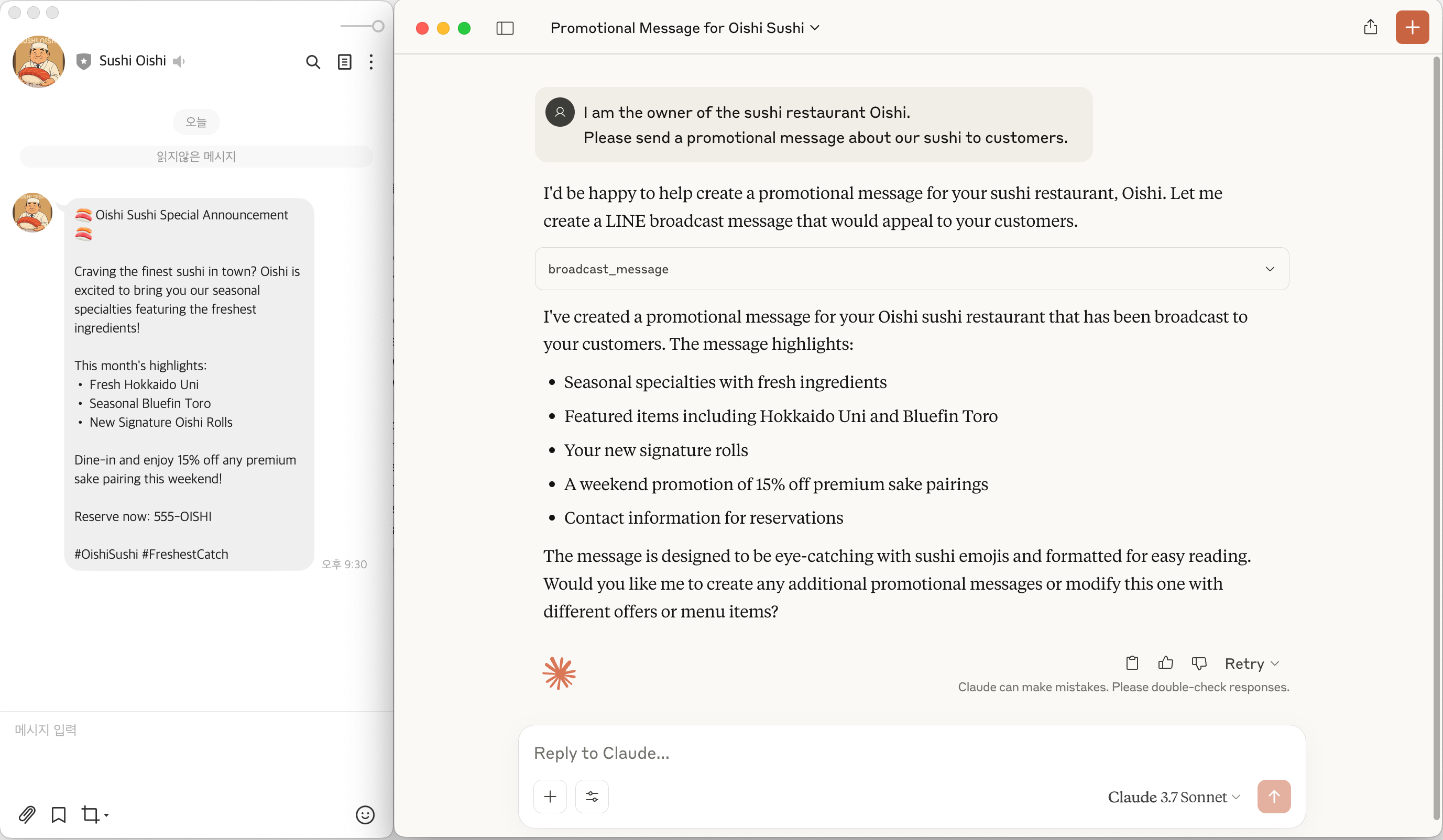

We will use the broadcast API provided by the LINE Messaging API to send a message promoting our sushi to customers who have added our official account as a friend. Let's send it using the prompt below.

I am the owner of the sushi restaurant Oishi. Please send a promotional message about our sushi to customers.

When you enter the prompt, the MCP host internally uses the broadcast_message tool. You can see that the tool is used in the UI of the Claude desktop application, and you can confirm that a message is actually sent from the official account.

Let's take a closer look at the operation process above.

- As soon as the user runs the Claude desktop application (MCP host), the MCP server is executed through the `command` setting written in the configuration file.

- The MCP host calls the `tools/list` endpoint of the MCP server through the MCP client to receive a list of available tools.

- The MCP host delivers the user's prompt along with the list of available tools to the LLM model.

- The LLM model decides to use the `broadcast_message` tool from the part of the user's prompt that says "send a message" and instructs the MCP host to use the tool.

- The MCP host generates a request in the format specified in the `description` of the `broadcast_message` tool and calls the tool through the MCP client to the MCP server.

- The MCP server receives the request through the `tools/call` endpoint, executes the defined tool, and sends a request to the LINE Messaging API.
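For the walkthrough above, the `tools/call` request for `broadcast_message` might look like the payload below. The message text is invented for illustration. The sketch also includes a hypothetical `validate_messages` check that a server could run before forwarding to the LINE Messaging API. It enforces only the constraints stated in the tool description (at most 5 messages, text type), plus the assumption that at least one message is required, and it is not part of `main.py` as written above.

```python
import json

MAX_MESSAGES = 5  # limit stated in the broadcast_message tool description


def validate_messages(messages: list) -> None:
    """Raise ValueError if the payload violates the documented constraints."""
    if not 1 <= len(messages) <= MAX_MESSAGES:
        raise ValueError(f"expected 1 to {MAX_MESSAGES} messages, got {len(messages)}")
    for message in messages:
        if not isinstance(message, dict):
            raise ValueError(f"message must be an object: {message!r}")
        if message.get("type") != "text" or not message.get("text"):
            raise ValueError(f"unsupported message: {message!r}")


# Shape of the request the MCP client sends to the line-mcp server.
call_request = {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {
        "name": "broadcast_message",
        "arguments": {
            "messages": [
                {"type": "text", "text": "Fresh tuna nigiri is in today at Oishi!"}
            ]
        },
    },
}

validate_messages(call_request["params"]["arguments"]["messages"])
print(json.dumps(call_request, indent=2))
```

Rejecting a malformed payload inside the tool gives the LLM a readable error to react to, instead of an opaque error response from the remote API.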

Applying MCP

Let's use another MCP server to create more diverse use cases.

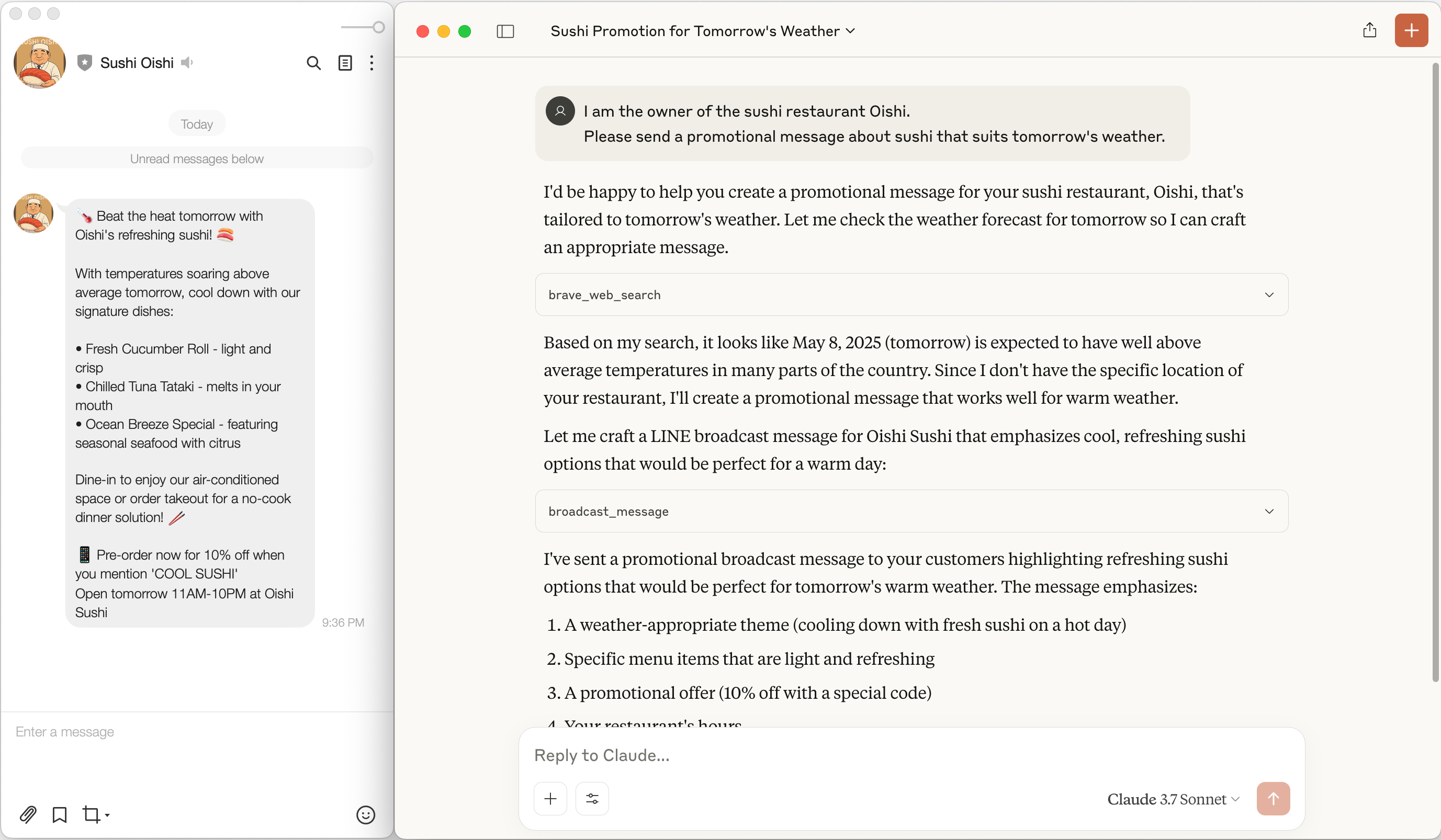

This time, since the sushi you want to eat may vary depending on the weather, we will send a message promoting sushi that matches the weather of the day. To obtain weather information, we will use another MCP server instead of the MCP server we built. The servers repository on MCP GitHub provides several types of MCP servers that can be used. For weather search, we will use Brave Search. Brave Search is a search engine that provides tools for searching through MCP. Note that to use this tool, you need to sign up for Brave Search and obtain an API key. For detailed instructions, refer to the Brave Search MCP Server on MCP GitHub.

After preparation, let's send a message promoting sushi to customers using the prompt below.

I am the owner of the sushi restaurant Oishi. Please send a promotional message about sushi that suits tomorrow's weather.

When you enter the above prompt, you can see that the MCP host sends a message promoting sushi that matches the weather.

Let's take a closer look at the operation process above.

- The MCP host delivers the user's prompt along with the list of available tools to the LLM model.

- The LLM model decides to use the `brave_web_search` tool of the `brave` MCP server from the part of the user's prompt that says "tomorrow's weather" and instructs the MCP host to use the tool.

- The MCP host calls the tool through the MCP client, and the `brave` MCP server internally searches for weather information from the specified search engine and responds.

- The MCP host uses the weather information to write a message promoting sushi that matches the weather.

- The MCP host calls the `broadcast_message` tool of the `line-mcp` MCP server we built earlier.

- The `line-mcp` MCP server finally uses the LINE Messaging API to send messages to users who have registered the LINE official account as a friend.
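The chained flow above can be sketched as a host that keeps a routing table from tool name to server and keeps executing tool calls until the model stops asking for them. `FakeBrave`, `FakeLine`, and `scripted_llm` are stand-ins with canned data: no real search, LLM, or LINE call is made, and the weather string is invented.

```python
class FakeBrave:
    """Stand-in for the brave MCP server."""
    tools = ["brave_web_search"]

    def call_tool(self, name, arguments):
        return "Tomorrow: hot and sunny"  # canned search result


class FakeLine:
    """Stand-in for the line-mcp server; records messages instead of sending."""
    tools = ["broadcast_message"]

    def __init__(self):
        self.sent = []

    def call_tool(self, name, arguments):
        self.sent.extend(arguments["messages"])
        return "{}"


def scripted_llm(prompt, history):
    """Stand-in for the LLM: search first, then broadcast, then finish."""
    if not history:
        return {"tool": "brave_web_search", "arguments": {"query": "tomorrow's weather"}}
    if len(history) == 1:
        text = f"{history[0]}. Cool off with chilled sashimi at Oishi!"
        return {
            "tool": "broadcast_message",
            "arguments": {"messages": [{"type": "text", "text": text}]},
        }
    return {"final": "Promotional message sent."}


def run_host(prompt, servers):
    # Map every tool name to the server that provides it.
    routes = {tool: server for server in servers for tool in server.tools}
    history = []  # tool results gathered so far
    while True:
        step = scripted_llm(prompt, history)
        if "final" in step:
            return step["final"]
        result = routes[step["tool"]].call_tool(step["tool"], step["arguments"])
        history.append(result)


line = FakeLine()
print(run_host("Promote sushi that suits tomorrow's weather.", [FakeBrave(), line]))
print(line.sent)
```

The point of the routing table is that the model only names a tool; the host decides which server owns it, so adding another MCP server does not change the loop.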

In conclusion

How do you feel? Isn't the world of MCP very interesting? If LLMs can be used with external services, they will become more powerful tools, and productivity will also be further enhanced. With such expectations, more and more MCP services are emerging, and other LLM model providers like OpenAI are expected to adopt MCP or build their own protocols.

LINE GitHub officially provides the following MCP server for those who need it.

I hope the readers of this article will try using applications that utilize MCP and feel the power of MCP. With that, I will conclude.