Hello. We are Dahee Eo from the Enablement Engineering team and Ki Cheol Cheon from the Service Reliability team, both responsible for site reliability engineering (SRE) tasks.

Our two teams belong to the Service Engineering department and work to improve the quality and ensure the availability of the services provided by the LINE app. More specifically, we are responsible for SRE for LINE app services such as messaging services and media platforms. We identify the technical elements required for service launches and events and provide appropriate solutions. We also offer technical support and automation that remove uncertainty and reduce repetitive work, so that the development organization can focus on development and operations. In addition, we work to improve service reliability in areas such as demand forecasting, performance improvement, observability enhancement, and incident response.

Based on this experience, we plan to publish a series of three articles on the topic of "adopting SLI/SLO for improving reliability." This first article gives a light introduction, for those hearing the terms for the first time, to what a service level indicator (SLI) and a service level objective (SLO) are and why they are needed.

The role of SRE

SRE performs all the engineering work needed to provide "stability" and "reliability" of services to customers. More specifically, this includes writing code for performance improvement and automation, monitoring systems, and responding to incidents. The aim is to keep the service stable while accommodating as much change as possible, and so provide a highly reliable service.

The term "reliability" here is somewhat abstract. It can be interpreted as any positive word related to trust, as shown below.

- Providing fast service.

- Providing stable service.

- Providing secure service.

This ultimately means providing a service that users can trust and use. In other words, reliability is determined not by the monitoring results of the service provider but by the users.

Therefore, SRE must be able to express, based on data, how users perceive the service. Instead of qualitative expressions like "the service is slow" or "the service is unstable", the situation should be expressed quantitatively, for example "the response time of the message transmission API has risen above 1 second, causing inconvenience to users." This requires criteria, grades, and plans for each case.

This is where the concepts of SLI, SLO, and service level agreement (SLA) come in. The LINE app has also introduced SLI and SLO to provide more reliable services to users.

How is reliability measured?

We said above that reliability should be expressed quantitatively. So how can it be measured?

Let's take a video service as an example. What matters most to users is that a video starts without a long delay and plays smoothly without interruption. Here, the latency, meaning the time taken for the video to start, is the SLI, or indicator. The target value for this indicator is the SLO. In addition, a subscription service is a contract between the user and the company, so it specifies the criteria for such indicators and the compensation for breaching them. This is the SLA.

This example is deliberately simple so that anyone can follow it. Now let's take a closer look at each concept.

User journey

To understand SLI, SLO, and SLA, we first need to look at the concept of the user journey. The user journey is defined as the process or flow that a user goes through when using a service. In the video service example mentioned earlier, "the process a user goes through to play a video on the service" is called the user journey. In messaging services, examples would be "the flow a user goes through to send a message" or "the process a user goes through to add a friend".

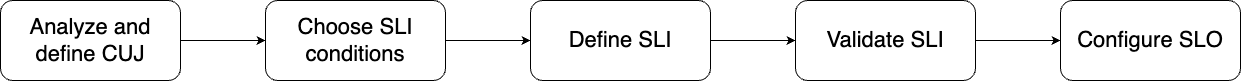

The journey for key functions and services within the user journey is called the critical user journey (CUJ). Introducing SLI and SLO starts with defining the CUJ for the main functions of the service and identifying the APIs used for each user journey.

SLI, SLO, SLA

Below is a table summarizing the concepts of SLI, SLO, and SLA.

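| Category | Service level indicator (SLI) | Service level objective (SLO) | Service level agreement (SLA) |
|---|---|---|---|
| Description | An indicator that measures something users consider important | The target value for an SLI | A contract between the user and the company that specifies the criteria for such indicators and the compensation for breaching them |
| Example | The latency of a video service, meaning the time taken for a video to start | The target value for the video start latency | The contract for a subscription video service |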

More detailed examples of SLI/SLO definitions are as follows. Each definition identifies the event, the success criterion, where and how it is measured (API and measurement method), and the measurement window. Please note that these are general examples, as the CUJ has not been specified.

<Example 1. Latency-based SLI/SLO>

| SLI Type | Latency |
|---|---|
| SLI Specification | |
| SLI Implementations | |
| SLO | |
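As a minimal sketch of how a latency-based SLI is commonly computed, the snippet below takes the share of requests in a measurement window that finish within a threshold and compares it with a target. The function name `latency_sli`, the 1-second threshold, the 99% target, and the sample latencies are all illustrative assumptions, not values used for the LINE app.

```python
from typing import Sequence


def latency_sli(latencies_ms: Sequence[float], threshold_ms: float) -> float:
    """Return the fraction of requests that completed within threshold_ms."""
    if not latencies_ms:
        raise ValueError("no requests in the measurement window")
    good = sum(1 for latency in latencies_ms if latency <= threshold_ms)
    return good / len(latencies_ms)


# Hypothetical latencies (ms) observed during one measurement window.
window = [120, 340, 95, 1800, 410, 230, 760, 1250, 180, 300]

sli = latency_sli(window, threshold_ms=1000)  # assumed 1-second threshold
slo_target = 0.99                             # assumed target, illustration only
print(f"SLI = {sli:.1%}, SLO met: {sli >= slo_target}")
```

Counting "good" requests against a threshold, rather than averaging latency, keeps the indicator simple and tied to what a user would notice.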

<Example 2. Availability-based SLI/SLO>

| SLI Type | Availability |
|---|---|
| SLI Specification | |
| SLI Implementations | |
| SLO | |
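An availability-based SLI can be sketched in the same way, as the share of requests that succeed. In this hypothetical example a request counts as failed when the server returns an error (for instance a 5xx response). That error definition, the `RequestCount` type, the request counts, and the 99.9% target are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestCount:
    """Request totals for one measurement window (hypothetical shape)."""
    total: int
    server_errors: int  # assumed failure definition: server-side errors only


def availability_sli(count: RequestCount) -> float:
    """Return the fraction of requests that did not fail."""
    if count.total <= 0:
        raise ValueError("no requests in the measurement window")
    return (count.total - count.server_errors) / count.total


# Hypothetical counts for one window.
observed = RequestCount(total=1_000_000, server_errors=700)
sli = availability_sli(observed)
slo_target = 0.999  # assumed target, illustration only
print(f"SLI = {sli:.4%}, SLO met: {sli >= slo_target}")
```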

Below is a summary of the flow of measuring reliability based on SLI/SLO.

The principles for defining and measuring SLI/SLO are as follows.

- View from the user's perspective: The important thing is not what values can be measured, but finding out what users consider important.

- Keep it as simple as possible: If SLI is aggregated in a complex manner, it may not clearly reflect the system's performance status, so it should be as simple as possible.

- Set as few SLOs as possible: It is important to select the minimum number of SLOs that can accurately identify the characteristics of the system.

- Set goals: Set expectations, but avoid setting excessive goals like 100%.

- Continuous development and improvement: It is impossible to set it perfectly from the beginning, so it must be continuously checked and improved.

Error budget

Once you have measured SLI and defined SLO to set goals, you need to manage risks and errors in case these goals are not met.

This is where the concept of "error budget" comes in. An error budget defines how much failure or degradation of service stability can be tolerated under the SLO. For example, an SLO of 99.9% for a month allows 0.1% of errors. Over a 28-day month, that comes to 40.32 minutes of errors.
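As a worked version of that calculation, assuming a 28-day (four-week) measurement window, which is the window that yields the 40.32-minute figure:

$$
\text{error budget} = (1 - \text{SLO}) \times \text{window} = (1 - 0.999) \times 28 \times 24 \times 60\ \text{min} = 40.32\ \text{min}
$$

With a 30-day window, the same formula gives 43.2 minutes.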

As shown below, the higher the SLO, the shorter the allowable error time (all figures below are for one month). However, a higher target is not always better. Google explains that raising 99.9% to 99.99%, or 99.99% to 99.999%, requires 10 times the effort (reference). It is therefore important to set the target according to the characteristics and situation of each service.

- 99.9% = 40 minutes of downtime → Enough time for people to detect that something is wrong, monitor it, find the root cause, and fix it

- 99.99% = 4 minutes of downtime → Too short for people to detect and resolve the problem, so the system should be designed to detect it and self-heal

- 99.999% = 24 seconds of downtime → Too short for people to detect; only the system can detect it
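The figures in this list can be reproduced with a few lines of Python. This is a sketch that assumes a 28-day month, which matches the rounded values above. The function name `allowed_downtime_seconds` is ours.

```python
def allowed_downtime_seconds(slo_percent: float, window_days: int = 28) -> float:
    """Return the error budget, in seconds, for an SLO given as a percentage."""
    window_seconds = window_days * 24 * 60 * 60
    return window_seconds * (100 - slo_percent) / 100


for slo in (99.9, 99.99, 99.999):
    seconds = allowed_downtime_seconds(slo)
    print(f"{slo}% -> {seconds / 60:.2f} min ({seconds:.0f} s)")
```

Each additional nine divides the budget by ten: about 40 minutes, then about 4 minutes, then about 24 seconds.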

The advantage of using an error budget is that the development team and the SRE team can talk about "service reliability", in other words, whether the service is operating well, in the "same language." Additionally, using an error budget allows the development team to manage risks independently. The development team can decide whether to focus more on adding new features or on service stability by looking at the state of the error budget. If the current error budget is exceeded and the service is not stable, all new feature development or releases should be stopped, and the focus should be on stability. Conversely, if the error budget is not exceeded, the focus can be on developing new features.
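The decision rule described above can be sketched as a small function. This is an illustration under our own assumptions: the budget is counted in events (requests), the function name `focus_for_next_cycle` and the sample numbers are hypothetical, and a real policy would be agreed between the development and SRE teams.

```python
def focus_for_next_cycle(slo: float, total_events: int, bad_events: int) -> str:
    """Return "stability" if the error budget is exceeded, else "features"."""
    budget = (1 - slo) * total_events  # number of bad events the SLO tolerates
    if bad_events > budget:
        return "stability"  # budget exceeded: pause releases, fix reliability
    return "features"       # budget not exceeded: keep shipping new features


# Hypothetical month: 1,000,000 requests against a 99.9% SLO (budget: about 1,000).
print(focus_for_next_cycle(slo=0.999, total_events=1_000_000, bad_events=500))
print(focus_for_next_cycle(slo=0.999, total_events=1_000_000, bad_events=2_000))
```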

Note that the errors discussed in the error budget include various errors that occur not only in the application but also in the network or storage, and these should be defined according to the characteristics of each service.

Utilizing SLI/SLO

Once you have defined and implemented SLI/SLO, you are ready to quantitatively assess the reliability that users experience. So how can SRE use SLI/SLO in day-to-day operations?

First, various stakeholders related to the service can evaluate reliability together using "SLI", "SLO", and "error budget." It is possible to talk about reliability in the same language through quantitative indicators.

Next, you can monitor the status of SLI/SLO together through regular meetings and manage reliability through reviews and retrospectives. In weekly or monthly meetings, you can share changes in indicators, identify the causes or issues that led to these changes, and define ways to improve them.

Additionally, by using the error budget to build an alert system, you can deliver step-by-step warnings to the on-call or service operation personnel of each service, allowing them to respond accordingly.
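One simple way to picture step-by-step warnings is to map the share of the error budget already consumed to an alert level. The sketch below is hypothetical: the level names and the 50%, 75%, and 100% thresholds are assumptions chosen for illustration, not the alert policy used for the LINE app.

```python
def alert_level(budget_consumed: float) -> str:
    """Map the consumed share of the error budget (0.0 to 1.0+) to an alert step."""
    # Assumed thresholds, checked from the most to the least severe.
    steps = [(1.0, "critical"), (0.75, "warning"), (0.5, "notice")]
    for threshold, level in steps:
        if budget_consumed >= threshold:
            return level
    return "ok"


budget_minutes = 40.32  # 99.9% SLO over a 28-day window
bad_minutes = 32.0      # hypothetical downtime so far in this window
print(alert_level(bad_minutes / budget_minutes))
```

Each level could then be routed to a different recipient, for example a notice to the service channel and a critical alert to the on-call engineer.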

Finally, you can define response and resource utilization policies based on the status of SLO and error budget. For example, if there is room in the error budget, you can choose a strategy for new feature releases and short deployment cycles, and conversely, if not, you can focus resources on stability improvement activities to recover reliability.

SLI/SLO, which can be utilized in this way, may need to be adjusted according to the organization's and business's situation. Therefore, related dashboards and indicators should be continuously monitored and managed.

Conclusion

We hope this article helps those who were curious about the role of SRE or who found the concept of SLI/SLO difficult to grasp. In parts 2 and 3, we will introduce measurement and implementation cases from LINE app services: part 2 covers platform cases, and part 3 covers application cases. We hope you will look forward to them. Thank you.