Since generative AI arrived in the industry, new methods have been emerging to raise productivity while maintaining software development quality. Ways to improve the productivity of quality assurance (QA) tasks, however, are rarely discussed. QA is typically equated with testing, so efforts focus on automating tests or generating reports with generative AI, which leaves the overall productivity of QA tasks unaddressed. In this article, we explore how to use generative AI to enhance the productivity of QA tasks as a whole.

Quality management at LY Corporation

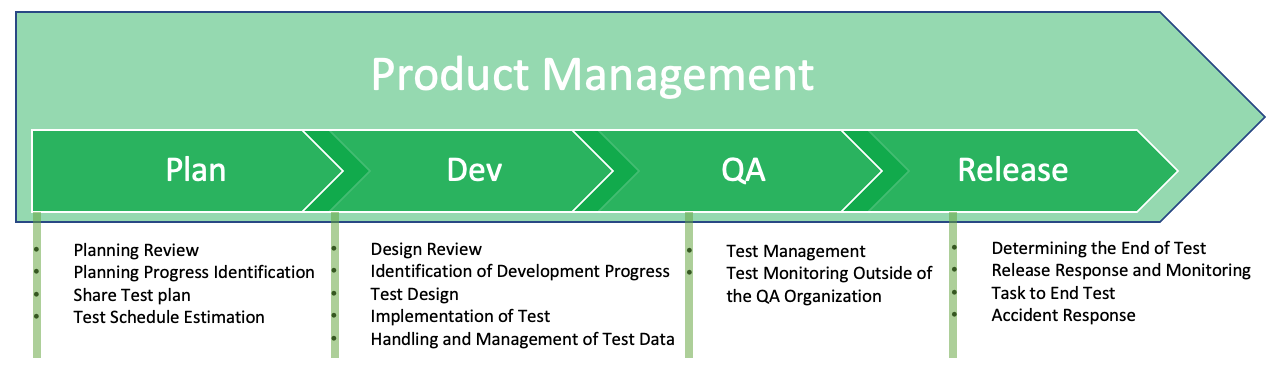

The definition of QA can vary depending on the nature of the project or service. At LY Corporation, QA engineers are involved in improving the quality of the product and the overall project process. They participate from the planning stage, working closely with project members to identify risks early and ensure a successful release.

The diagram below defines each stage of product management and the quality management tasks required at each stage.

Unlike the traditional approach of conducting quality management after the planning and development stages, QA engineers at LY Corporation perform quality management throughout all stages. This means more quality management work than before, so tasks must be handled efficiently.

We are using generative AI to enhance the overall productivity of quality management in these challenging situations. Let's look at how we apply generative AI and how it improves productivity.

Reviewing the use of generative AI

The first step in using generative AI is to check internal security regulations. At LY Corporation, we use an in-house installation of ChatGPT. Users must comply with security measures and avoid entering personal or confidential information. The in-house ChatGPT operation team also filters sensitive information to prevent it from leaking outside the company.
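As an illustration of the kind of pre-check a user could run before pasting text into a chatbot, here is a minimal sketch. It is not the filter used by the in-house operation team; the `redact` function and both patterns are hypothetical, and a real policy would cover far more than e-mail addresses and phone-like numbers.

```python
import re

# Hypothetical patterns for illustration only; a real deployment would
# follow the organization's own security policy.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\- ]{8,}\d")


def redact(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


sample = "Contact taro@example.com or 090-1234-5678 about the release."
print(redact(sample))
```

A check like this only reduces accidental leaks. It does not replace the rule that personal or confidential information should not be entered in the first place.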

The next step is to understand the limitations of generative AI and identify the tasks it can handle. While generative AI excels at analysis and summarization, its results are not always reliable. Therefore, to increase productivity using generative AI, it's better to use it as an auxiliary tool rather than relying on it entirely.

Now, let's look at the procedure for applying generative AI to quality management.

Procedure for applying generative AI to quality management

First, refer to the diagram "Figure 1. Tasks at each stage of product management" to select tasks that require analysis and summarization. These are the areas where generative AI can be most effective. The table below defines the quality management tasks that can use the help of generative AI at each stage.

| Planning | Development | Testing | Release |
|---|---|---|---|
| | | | |

Next, create prompts that can be used universally in quality management at each stage. Use well-known prompt engineering techniques, focusing on role designation and format designation techniques. This article will not cover prompts in detail but will explain how they are used in quality management. We created the following prompts to match the purpose of each quality management activity:

- Plan Advisor: Analyzes and summarizes planning documents, providing various outputs based on user questions

- Thread Summarizer: Summarizes message threads on Slack, identifying decisions, potential issues, and action items

- Test Designer: Designs tests and visualizes them based on input information, deriving basic test cases

- Test Helper: Analyzes bugs, extracts labels, and suggests additional test paths

- App Reviewer: Collects reviews in various languages, creates statistics, and summarizes key issues by country
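To make role designation and format designation concrete, the sketch below assembles a prompt from a role, a task, and an output format. The `PromptTemplate` class and the wording of the example are assumptions made for illustration; they are not the actual prompts used at LY Corporation, which this article does not reproduce.

```python
from dataclasses import dataclass


@dataclass
class PromptTemplate:
    role: str           # role designation: who the model should act as
    task: str           # what the model should do with the input
    output_format: str  # format designation: the shape of the answer

    def render(self, user_input: str) -> str:
        """Combine role, task, format, and user input into one prompt."""
        return (
            f"You are {self.role}.\n"
            f"Task: {self.task}\n"
            f"Output format: {self.output_format}\n"
            f"Input:\n{user_input}"
        )


# Hypothetical wording loosely based on the Thread Summarizer description.
thread_summarizer = PromptTemplate(
    role="a QA engineer who writes meeting minutes",
    task="Summarize the Slack thread, identifying decisions, "
         "potential issues, and action items.",
    output_format="Sections: Title, Main discussion points, "
                  "Conclusions, Problems, Action items",
)
print(thread_summarizer.render("<thread text goes here>"))
```

Keeping the role and format separate from the input makes it easier to adjust one of them when the results of a prompt are unsatisfactory.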

The table below links the main quality management task at each stage with the prompts used.

| | Planning | Development | Testing | Release |
|---|---|---|---|---|
| Task | | | | |
| Prompts | | | | |

Once the prompts are created, apply them to quality management tasks at each stage. Don't be satisfied with the results of a single prompt execution. Try various questions to get better results. Continuously improve the prompts based on the results to provide faster and more satisfactory answers.

Introducing cases of using generative AI

Let's look at some actual cases of how we improved the productivity of quality management.

Organizing planning documents

When planning documents are unstructured, too complex, or frequently updated, tracking changes can be challenging. Previously, QA engineers had to identify key features themselves, then compare and organize the changes for each update. Using prompts like Plan Advisor, we reduced the time needed for summarizing and tracking changes. Plan Advisor not only produces output in a predefined structured format but also supports other forms of summary through follow-up conversation.

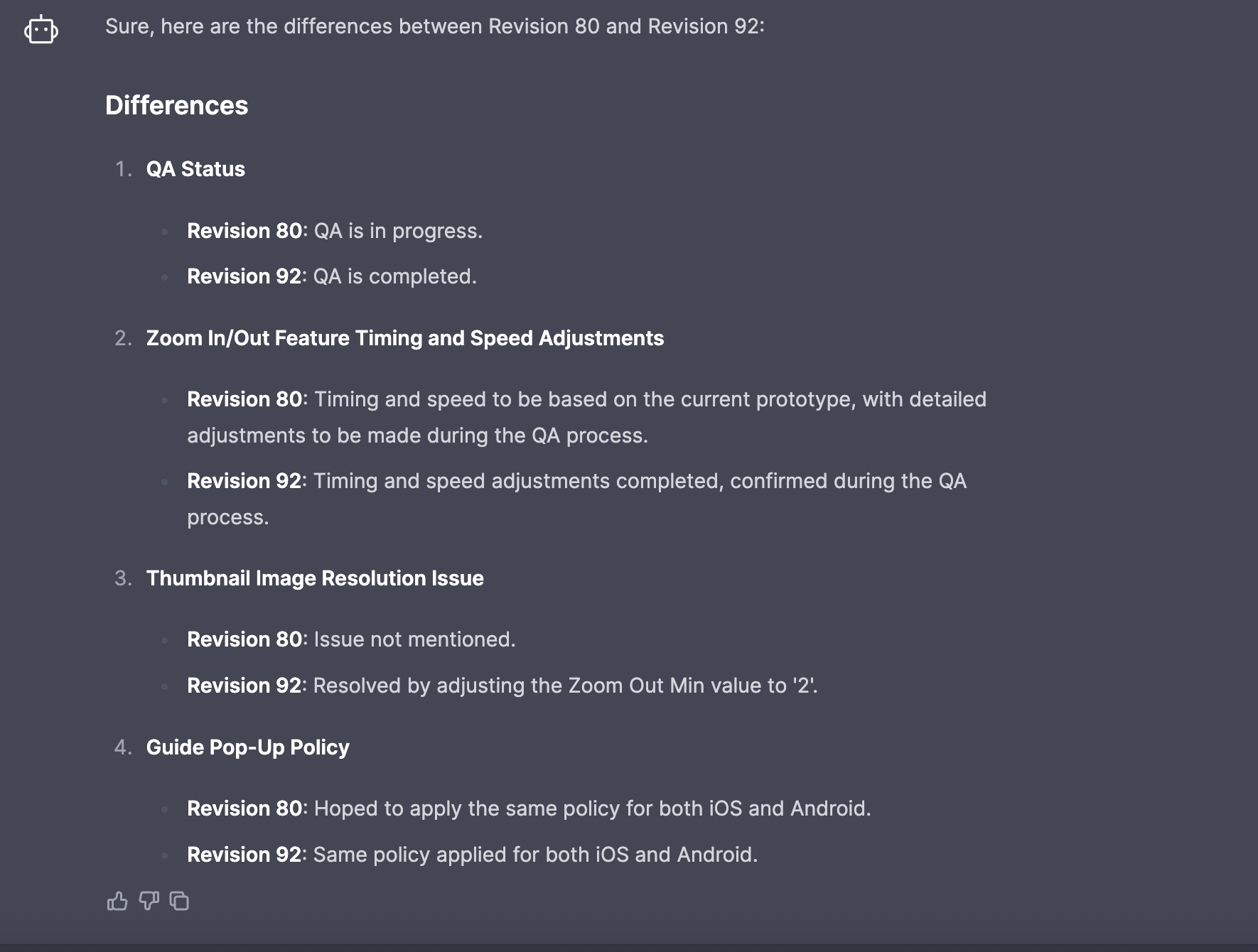

The example below shows how Plan Advisor tracked changes in the planning document for the zoom in/out feature applied to LINE Album.

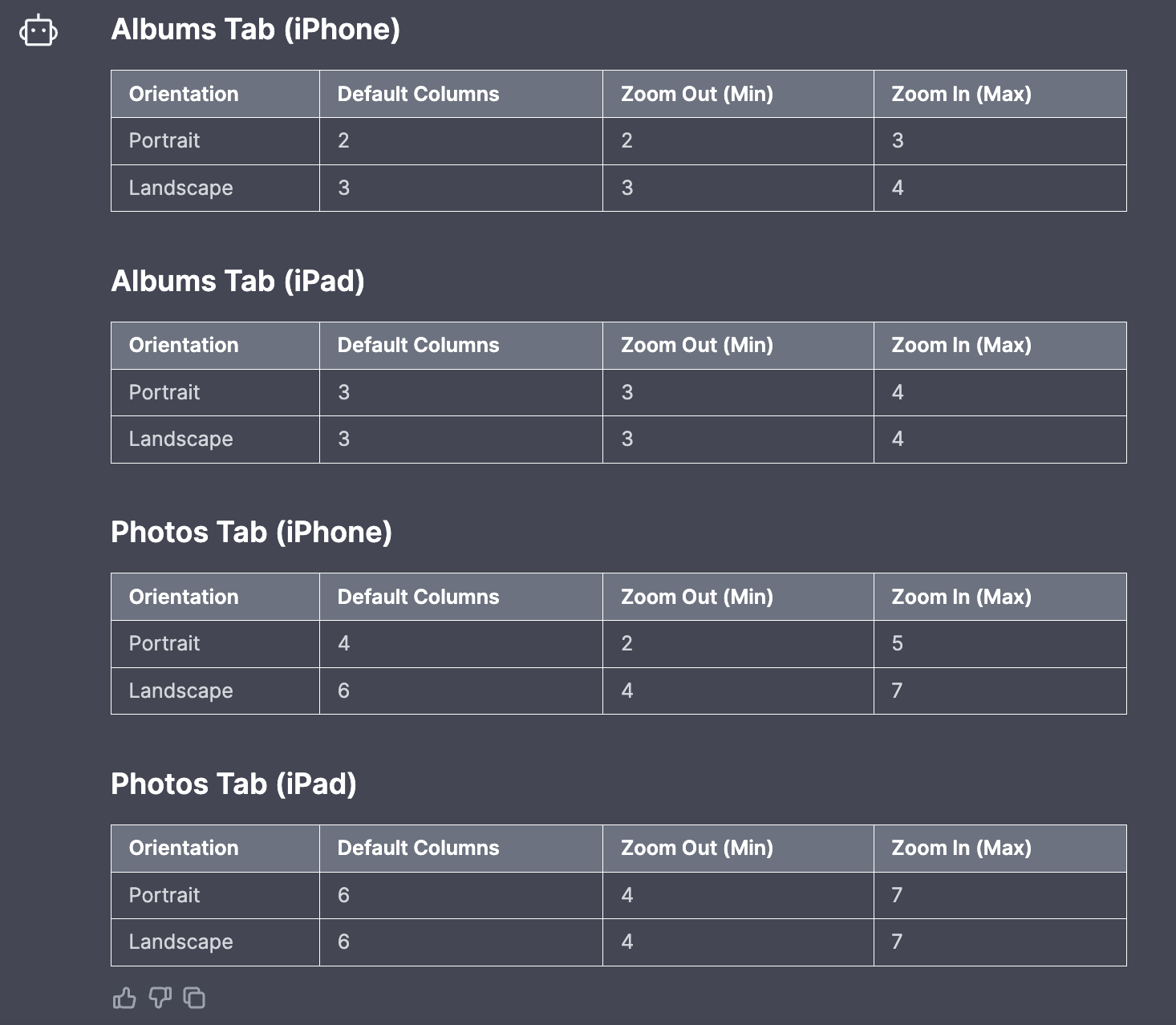

Complex specifications described in long texts can be organized into tables or charts as shown below.

If earlier planning documents are worth referencing alongside the current version, you can use them to find parts that may affect each other and identify risks early. However, if the information is insufficient or the model's temperature is set high (toward the "creative" end), inaccurate answers (hallucinations) become more likely. Therefore, it's essential to have a plan for verifying the AI chatbot's answers.

Summarizing and analyzing Slack message threads

Even when QA engineers participate from the early planning stages, some technical or specification discussions take place between planners and developers, or among developers, before anyone asks QA engineers for their opinions or requests inspection tasks.

At LY Corporation, Slack is the primary communication tool, and such discussions usually take place in thread form. If QA engineers try to understand the contents of these threads after the planning and development discussions are over, it can take a lot of time. Additionally, they may need to ask further questions based on the contents. To prevent this, QA engineers could participate in or monitor every thread, but this would consume a lot of QA resources and isn't realistic.

Using prompts like Thread Summarizer, you can summarize large amounts of conversations. Many companies have already adopted summary functions combined with generative AI.

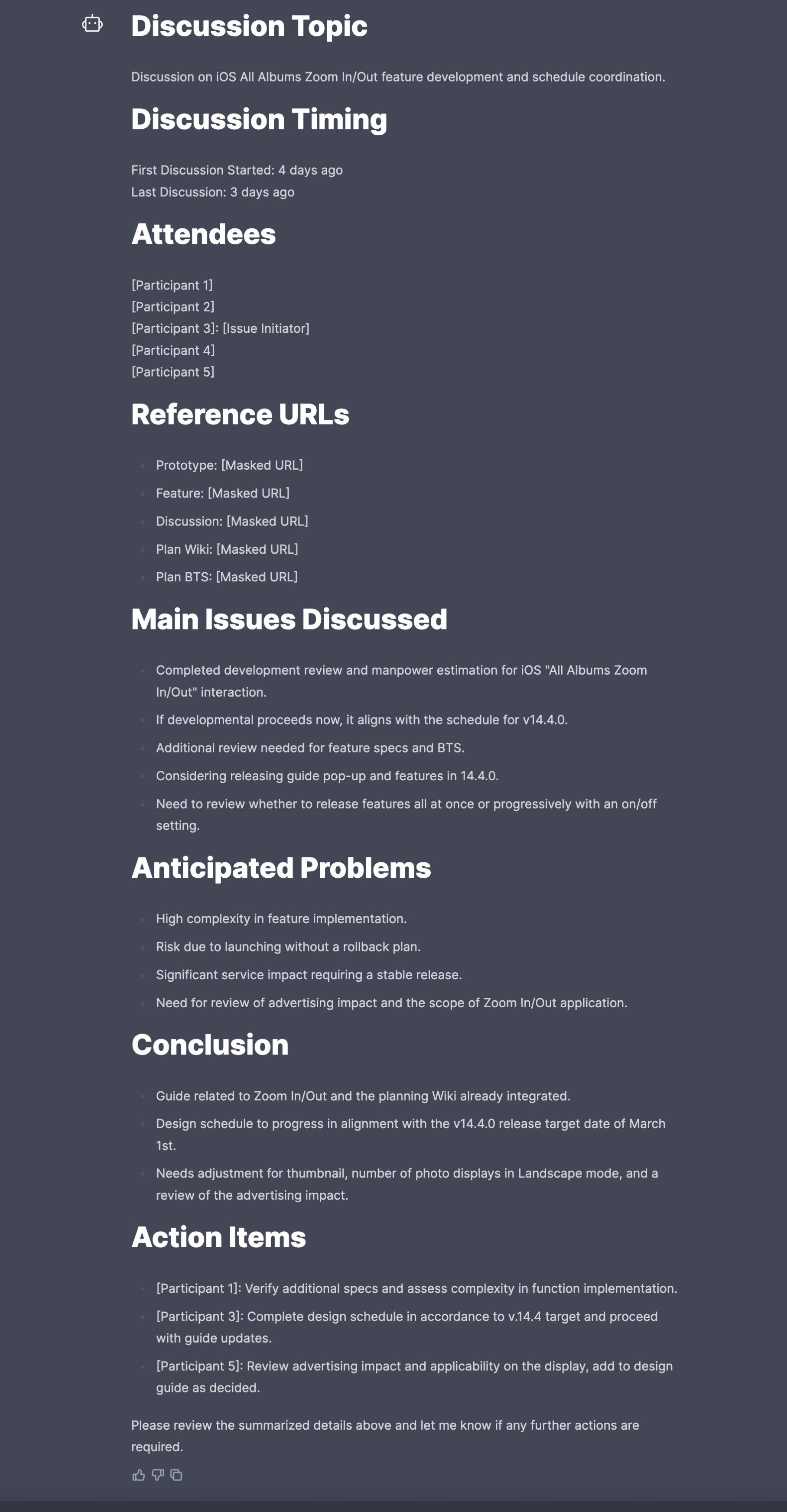

The example below shows how Thread Summarizer summarized the thread about the zoom in/out feature applied to LINE Album into a meeting minutes format. It includes the issue initiator, thread summary title, main discussion points, conclusions, problems, and action items (sensitive information such as participants' real names and reference URLs has been removed for security).

As in the previous case, you can ask additional questions from various perspectives through conversations. Specifically, you can derive considerations from a testing perspective or track updates needed by comparing with existing planning specifications.

Test design and deriving test cases

Based on the contents of the planning document, thread summaries, or other generative AI conversations introduced earlier, you can derive new test cases using generative AI. While the quality of these derived test cases may not be high, they are suitable as draft documents for quickly understanding the main test flow and creating test cases.

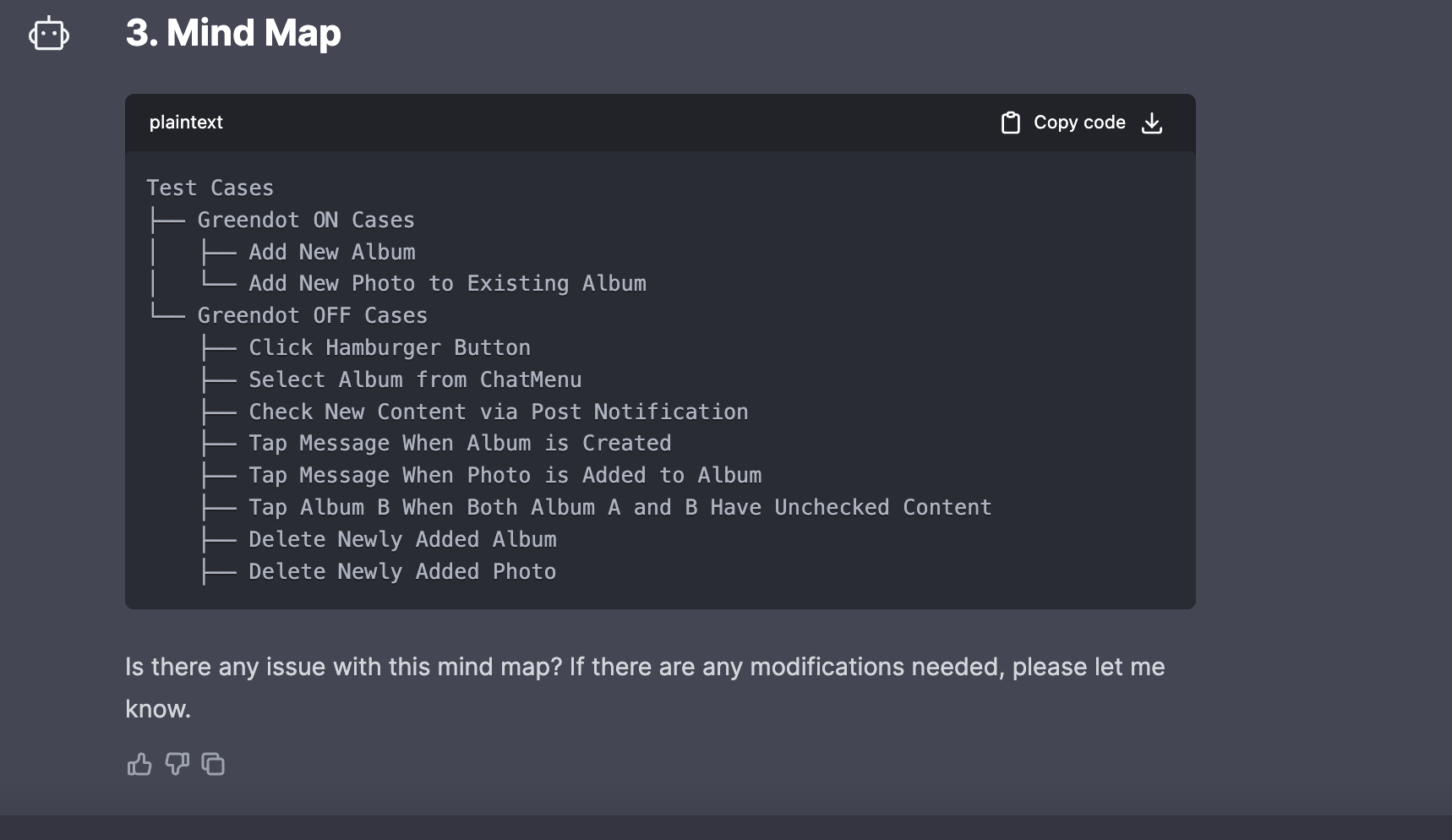

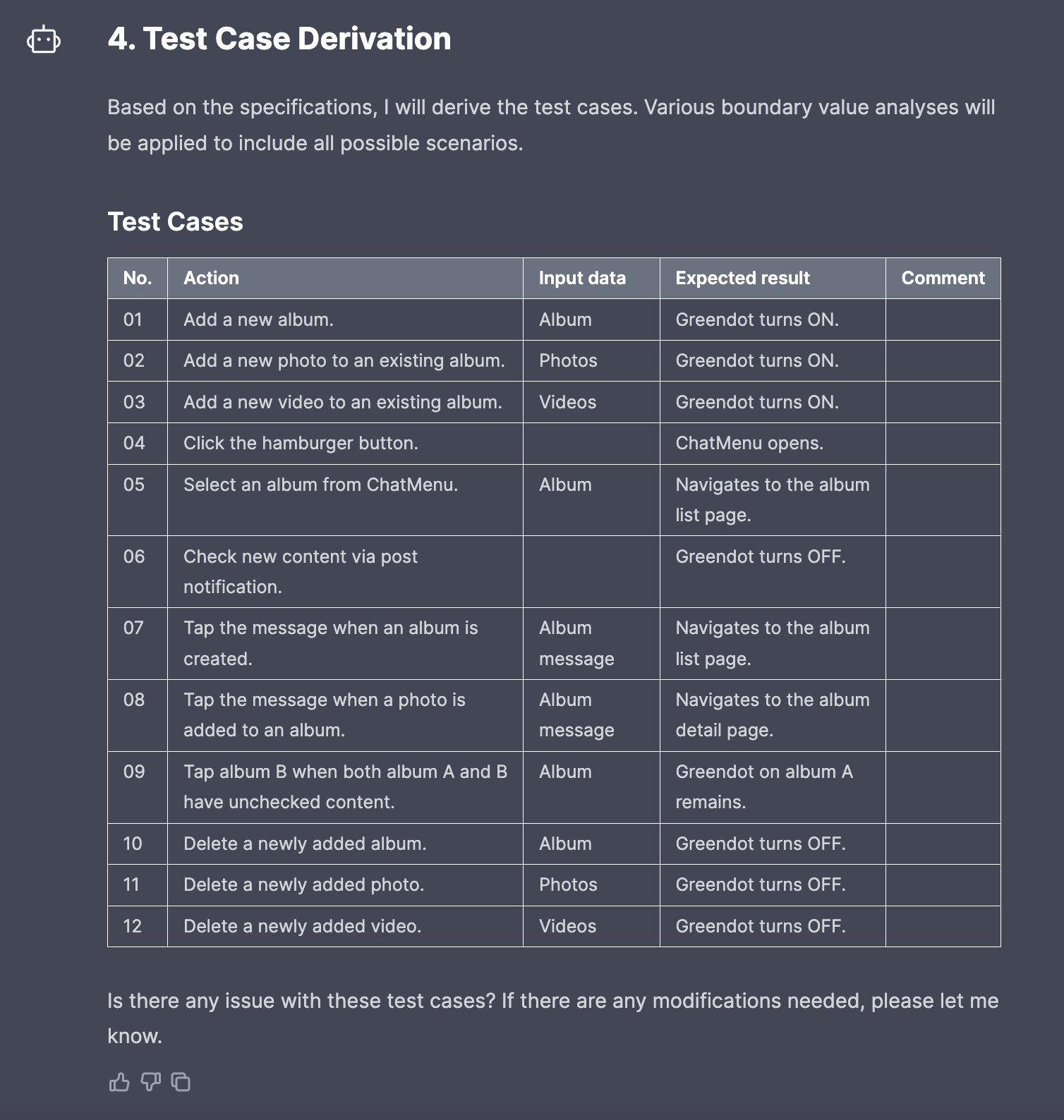

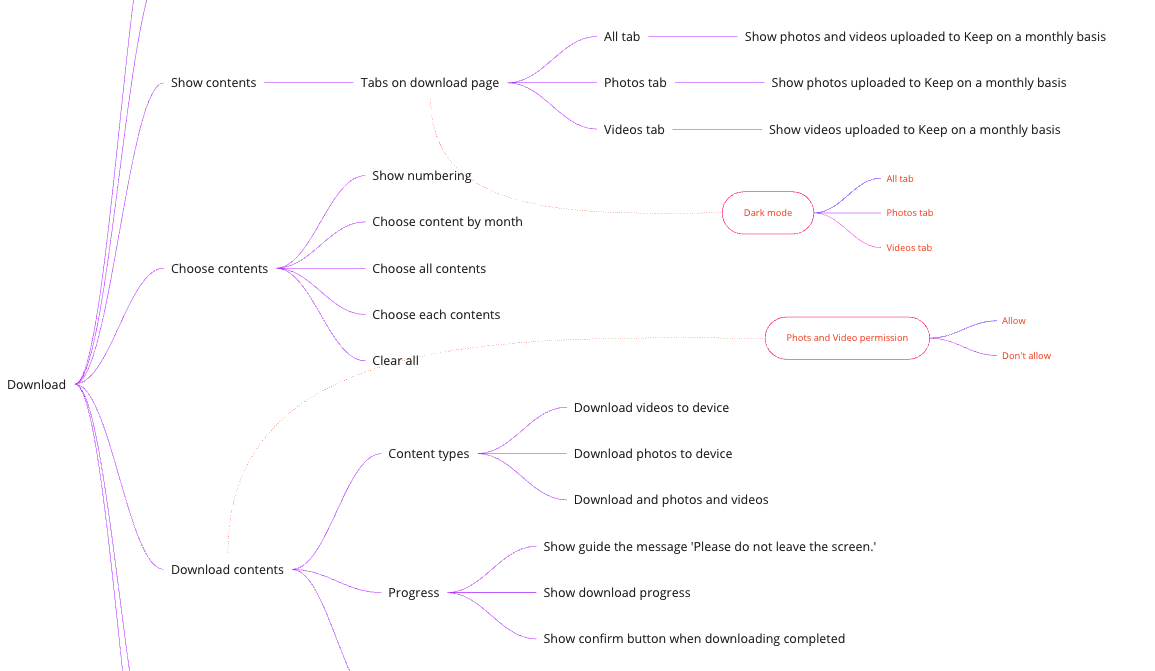

Test Designer receives input from users step by step, creates a mind map, visualizes it, and derives basic test cases. The example below shows the result of performing each step through Test Designer for the Green Dot feature specification of LINE Album (some specifications are excluded for security).

Afterward, QA engineers can check if additional cases need to be added and, if necessary, add specific cases or redo the visualization process from the previous state. The example below shows a draft of test cases created based on the planning specifications and visualized information (there are differences from the actual test cases used, and some cases are excluded for security).

Once this process is complete, you can export the test cases in JSON format to create test cases on other test platforms or enhance the test cases through test case review tasks.
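The JSON export can be pictured with a small sketch. The `DraftCase` fields (`case_id`, `title`, `steps`, `expected`) are assumptions chosen for illustration; the schema a given test platform expects will differ.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class DraftCase:
    # Hypothetical fields; adapt them to the target test platform.
    case_id: str
    title: str
    steps: List[str] = field(default_factory=list)
    expected: str = ""


def export_cases(cases: List[DraftCase]) -> str:
    """Serialize draft test cases to a JSON array."""
    return json.dumps([asdict(c) for c in cases], ensure_ascii=False, indent=2)


drafts = [
    DraftCase(
        case_id="TC-1",
        title="Zoom in on a photo",
        steps=["Open a photo", "Pinch out"],
        expected="The photo is enlarged",
    ),
]
print(export_cases(drafts))
```

`ensure_ascii=False` keeps non-ASCII text readable in the output, which helps when test cases are written in languages other than English.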

One thing to note is that, while you can analyze boundary values and provide more data through conversations with generative AI to create more sophisticated test cases, producing perfect and highly accurate test cases this way may take longer than writing them by hand. Therefore, it's essential to decide in advance what quality level is appropriate for test cases created with the help of generative AI.

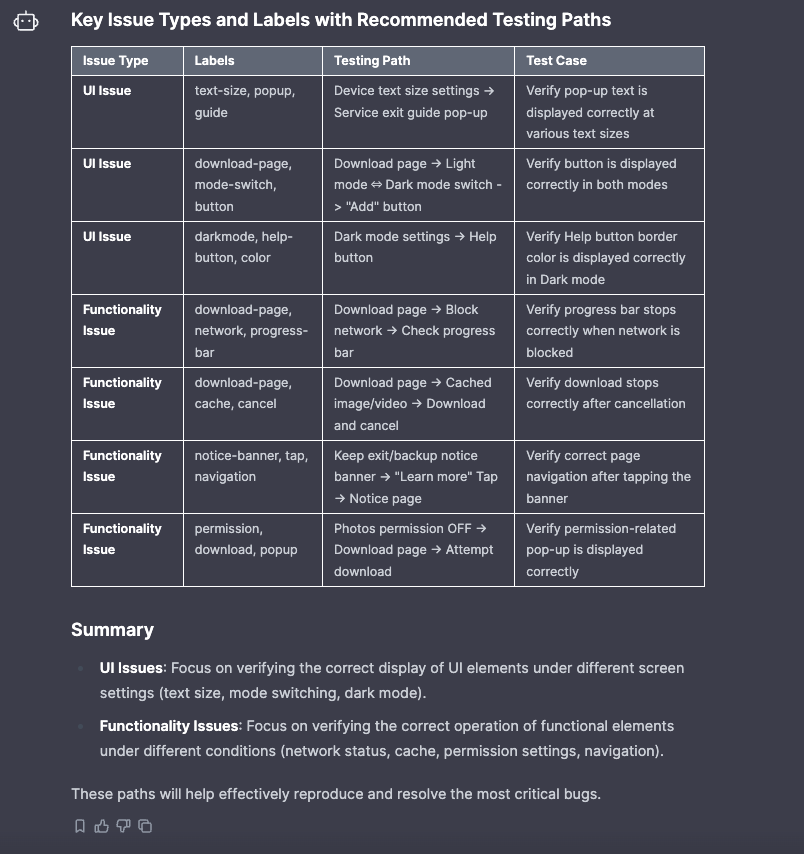

Identifying bugs and expanding exploratory testing

You can use generative AI to automatically extract labels and identify similar past issues by analyzing registered bugs and their characteristics. Previously, bug characteristics were detected through various filter conditions. Now, using prompts like Test Helper, you can find similar past issues more conveniently. You can also receive suggestions for additional test paths based on the identified issues, leading to higher-quality testing.
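Once labels have been extracted, finding similar past issues can be as simple as comparing label sets. The sketch below uses Jaccard similarity (size of the intersection divided by size of the union), which is one possible measure and not necessarily what Test Helper does; the bug IDs, labels, and the `threshold` value are made up for illustration.

```python
from typing import Dict, List, Set, Tuple


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Return |a ∩ b| / |a ∪ b|, or 0.0 when both sets are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def similar_bugs(
    new_labels: Set[str],
    past_bugs: Dict[str, Set[str]],
    threshold: float = 0.5,
) -> List[Tuple[str, float]]:
    """Return past bugs whose label overlap meets the threshold, best first."""
    scored = [(bug_id, jaccard(new_labels, labels))
              for bug_id, labels in past_bugs.items()]
    matches = [(bug_id, score) for bug_id, score in scored if score >= threshold]
    return sorted(matches, key=lambda item: item[1], reverse=True)


# Hypothetical past bugs with labels already extracted.
past = {
    "BUG-101": {"download", "network", "timeout"},
    "BUG-102": {"upload", "ui"},
    "BUG-103": {"download", "timeout"},
}
print(similar_bugs({"download", "timeout"}, past))
```

In this example the bug with identical labels ranks first, the bug sharing two of three labels ranks second, and the unrelated bug is filtered out.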

The example below shows the process of extracting labels from existing bugs and creating recommended test paths for the download feature of the LINE Keep service.

The recommended additional test paths can be immediately applied to exploratory testing. Confirm the paths deemed suitable for testing and add them to the test charter.

The example below shows the process of conducting exploratory testing, with the red nodes representing additional test paths suggested by generative AI.

The downside of exploratory testing is that inexperienced testers may find it challenging to identify bugs, and it's uncertain whether the set test paths are suitable for finding bugs. To address this, you can use pair testing, but this adds cost and time.

While generative AI is not yet as efficient as a pair tester, it can suggest new test paths that a pair tester might miss, reducing costs and time compared to traditional methods.

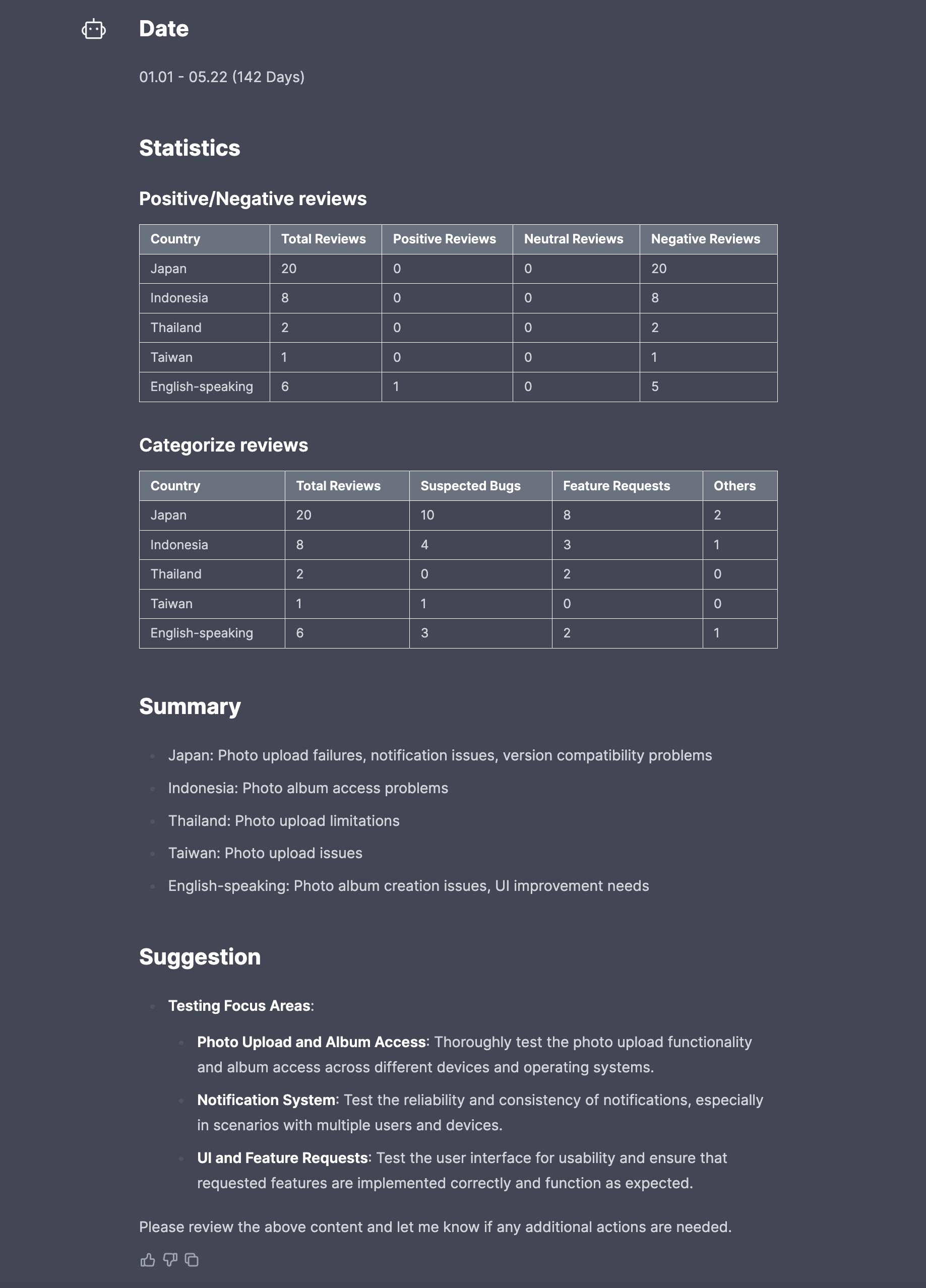

Summarizing and analyzing app reviews

At LY Corporation, we analyze app reviews in various ways. We directly read reviews on the App Store and Google Play as well as social media reactions to the app. We also operate internal tools that collect and analyze these reviews. The problem is that reviews are written in many languages, so each language must be translated separately before the content can be understood.

Using prompts like App Reviewer, you can quickly translate reviews written in various languages into the desired language. Additionally, you can analyze reviews by dividing them into positive and negative reviews by country or summarizing key issues by country in a tag cloud format. You can also distinguish whether the review is about a feature improvement or a feature error and get opinions on which parts to test further from a testing perspective.
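The per-country breakdown can be sketched as a simple tally. This assumes each review has already been given a `country` and a `sentiment` label (for example, by the generative AI); the field names and sample data are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def tally_by_country(reviews: List[Dict[str, str]]) -> Dict[str, Dict[str, int]]:
    """Count reviews per sentiment label for each country."""
    counts = defaultdict(Counter)
    for review in reviews:
        counts[review["country"]][review["sentiment"]] += 1
    return {country: dict(counter) for country, counter in counts.items()}


# Made-up sample data; real reviews would come from a collection tool.
reviews = [
    {"country": "JP", "sentiment": "positive"},
    {"country": "JP", "sentiment": "negative"},
    {"country": "TH", "sentiment": "negative"},
    {"country": "JP", "sentiment": "negative"},
]
print(tally_by_country(reviews))
```

Because the sentiment labels themselves come from a model, spot-checking a sample of them by hand is a reasonable safeguard before relying on the totals.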

The example below shows the analysis of reviews related to the LINE Album service collected from Asian countries with the most users.

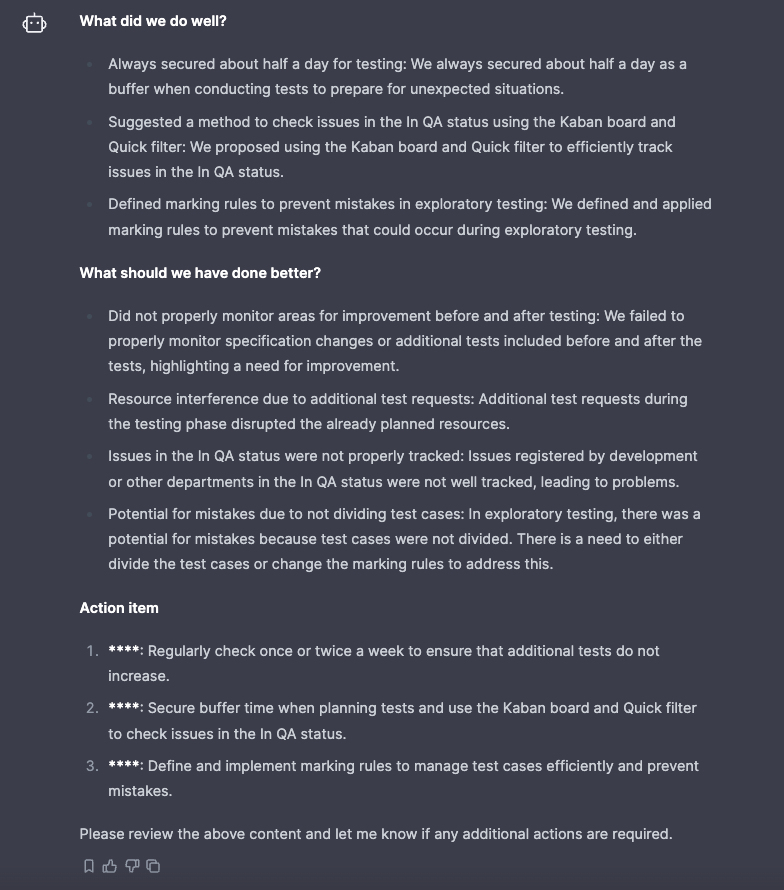

Organizing retrospectives

After the release, we conduct retrospectives on quality management. We make sure that what went well continues and create additional action items for areas that need improvement. We use prompts like Thread Summarizer to organize the lengthy conversations from the retrospectives into a meeting minutes format.

Conclusion

In addition to the methods mentioned in this article, there are various ways to introduce generative AI into QA management. If the tasks are periodic, you can further increase efficiency through automated methods.

Generative AI is undoubtedly an attractive productivity enhancement tool, but there are also considerations such as accuracy and security. Additionally, forcing generative AI into tasks may reduce productivity or result in unsatisfactory outcomes. Therefore, when introducing generative AI, it is essential to answer the following questions to ensure effective implementation:

- What quality management tasks are required in the current organization, project, or service?

- Do those tasks include elements where generative AI can be used, such as analysis and summarization?

- If generative AI is introduced, to what extent will it be used?

- Will productivity improve as a result?

We hope more cases of using generative AI to enhance quality management productivity will be shared. Thank you for reading, and we look forward to seeing how generative AI can further revolutionize QA processes.

By thoughtfully integrating generative AI into QA tasks, organizations can not only streamline their workflows but also ensure higher quality outcomes. As the technology continues to evolve, the potential applications and benefits will only grow, making it an exciting time for QA professionals to explore and innovate.

If you have any questions or would like to share your own experiences with generative AI in QA, please feel free to reach out. Let's continue to learn and grow together in this rapidly advancing field.