Hello. We are Jaehoon Yoo and Donggyu Seo, working on the LINE Pay team. The Pay SRE team is responsible for covering the gray area between the development and operational environments of LINE Pay. We carry out various activities to enhance the reliability and maximize the performance of the entire LINE Pay system. In this post, we'd like to share our experience of strengthening security and automating server tasks using Rundeck and Ansible over the past two years.

Background

Since its launch in 2014, LINE Pay has grown rapidly and now operates a wide range of business domains, utilizing thousands of servers. Focusing on developing business domains to meet diverse user demands has allowed us to launch various payment services. However, this has increased the complexity between integrated systems, leading to an increase in the frequency of infrastructure tasks in the operational environment. Predicting the impact of changes became difficult, raising operational costs and increasing the possibility of accidents and potential degradation of service quality. Ultimately, we needed to improve our management methods and system to balance the growth speed and stability of the service.

With the global emphasis on user privacy, society at large is demanding higher levels of security for systems handling user information. Considering the complicated operational environment, we needed to simplify while establishing a safer system and work process that can satisfy enhanced security regulations.

Considering these various situations, we set two main goals and contemplated ways to improve the operational environment while maximizing work efficiency.

- Improving and automating processes to reduce mistakes during operations

- Enhancing security levels and preparing for accidents by granting appropriate permissions to operational workers and managing work history

Setting introduction goals

LINE Pay has been continuously growing since launching its online payment service in 2014. We expanded our service areas globally to Japan, Taiwan, Thailand, and more. We've also added support for various scenarios where payment would be needed such as transportation fare, taxes, and in-game purchases. As a result, our user base has steadily increased, and we had to expand our infrastructure system on a massive scale to provide stable services.

In this situation, the LINE Pay SRE team set four goals to effectively orchestrate existing servers and quickly introduce new servers in the same environment.

- Server configuration and setting codification

- Process simplification

- Scalability

- Securing flexibility

Server configuration and setting codification

Previously, we managed service execution settings and infrastructure configuration codes mainly by synchronizing code through Git. However, as the service grew and apps increased, we often had to configure large-scale operational environments or execute irregular temporary tasks that could run commands remotely on desired servers. Trying to perform these tasks with the tools we had been using made the boundaries with existing tasks ambiguous. The execution process became complicated, leading to delays in response and increasing the risk of accidents. To solve this, we decided to introduce a tool that can manage and combine settings in one environment and introduce the infrastructure as code (IaC) method. This allows a single admin to quickly and consistently configure large-scale infrastructure.

Process simplification

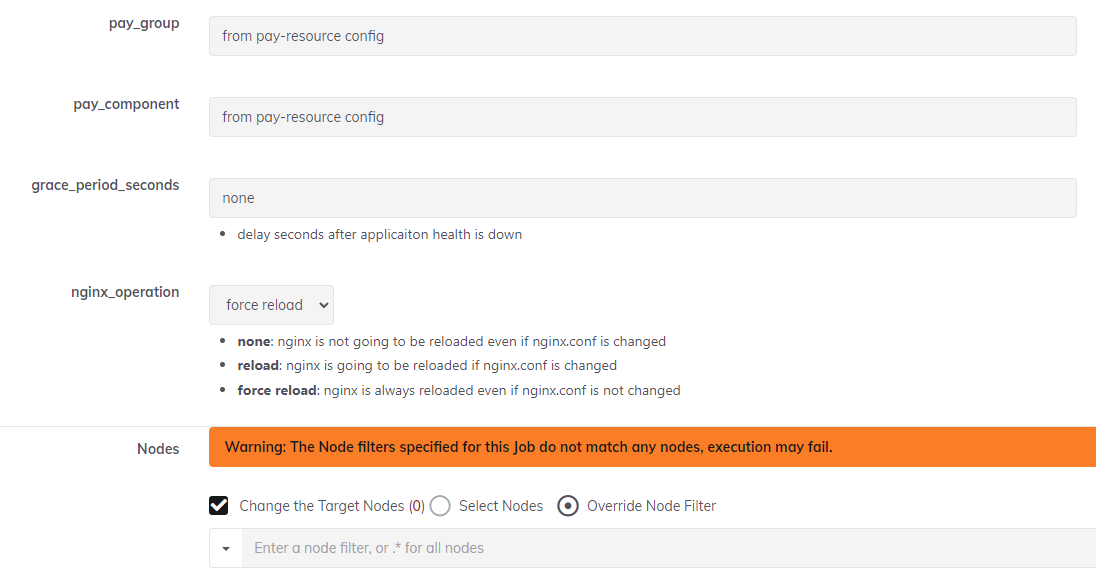

One of the goals we aimed for when introducing infrastructure automation tools was ironically not to totally automate our work, but to simplify manual labor. If we had only aimed for server installation or tool installation, full automation would have been possible. In reality, the tasks that took up the most time in service operation were not server installation or synchronization of settings. They were irregular temporary tasks (or ad-hoc tasks) where commands were directly executed on specific servers to respond to intermittent issues. These tasks included log extraction, dump tasks, file confirmation, access control list (ACL) confirmation, and collecting monitoring information for issue confirmation. So, we collected the frequency of these tasks on a monthly basis to first select the tasks that needed to be automated. We aimed to improve the process by providing a tool that developers could directly execute.

Scalability

Inside the LINE Pay service, there are various non-functional platforms, including monitoring, integrated test environments, and CI/CD tools. When introducing operation automation tools, we wanted them not just to automate tasks but also to work together with our existing solutions. For example, we wanted an existing service to be able to trigger tasks just by calling a simple webhook. When the monitoring system detects a failure, it could send a notification and, at the same time, run a task that checks the status of the target system (a semi auto-healing function). Likewise, a report could be generated automatically after a performance test.
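As a minimal sketch of the webhook idea, the snippet below builds the HTTP request a monitoring system might send when it detects a failure. The URL, the payload fields, and the function name are all hypothetical; in practice the webhook URL would come from the Rundeck webhook configuration, and the payload shape would be whatever the monitoring system emits.

```python
import json
import urllib.request


def build_webhook_request(webhook_url: str, alert: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request that carries the alert as JSON."""
    body = json.dumps(alert).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical alert payload and placeholder URL; neither comes from the article.
alert = {"host": "admin-server-01", "check": "health", "status": "DOWN"}
request = build_webhook_request("https://rundeck.example.com/hooks/PLACEHOLDER", alert)

# Passing `request` to urllib.request.urlopen() would deliver the alert and
# trigger whatever job is bound to the webhook.
```

Keeping the request construction separate from sending makes the payload easy to inspect in tests before wiring it to a real endpoint.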

Flexibility

The automation targets included not only newly configured infrastructure but also existing environments. Therefore, we looked for a tool that supports an automation configuration method compatible with all environments and doesn't require additional replacement.

Automation tool selection

We reviewed what tools could achieve the four goals mentioned in the LINE Pay system environment. There are various tools that can be used to proceed with operation automation. Among many tools, we narrowed down the selection range to popular tools among developers, such as Terraform, SaltStack, and Ansible, and started the review.

Automation tool review - Terraform vs. SaltStack vs. Ansible

| | Terraform | Ansible | SaltStack |
|---|---|---|---|
| Supported OS | | Same as left | Same as left |
| Command push | No | Yes | Yes |
| Execution module | Provides built-in modules for each environment (such as physical equipment, cloud platforms), and providers also offer modules to Terraform (for example, NCP Terraform) | Provides various modules (about 3,300) | Provides built-in modules (about 140, but community support is lacking) |
| Provides CLI | Yes | Yes | Yes |
| Purpose | Infrastructure and cloud service provisioning orchestration (manages various cloud and provision environments using HashiCorp's proprietary programming language) | Configuration management tool (suitable for detailed tasks such as application installation and command delivery) | Same as left |
| Working method | Declarative (defines the state that needs to be maintained consistently) | Procedural (batching the exact work procedure into code) | Same as left |
| GUI | No (No default GUI, can be added through third-party solutions) | Yes (No difference in features between enterprise and open source) | No (Provided but lacks functionality) |
| License | Open source, Enterprise | Same as left | Same as left (No difference in features between open source and enterprise) |
| Advantages | Suitable for environments that require consistent states, as it automatically calculates and recovers declared environments | Communicates via SSH, doesn't require a separate agent, and is the most widely used configuration management tool | Provides convenience for ad-hoc tasks such as the file transfer command salt-cp, easy to manage large-scale settings due to ZeroMQ-based publish/subscribe communication, and provides user policies |
| Disadvantages | Cannot process the entire modeling of work with a declarative model (such as software installation, patching, and network access permission changes) | Cannot manage target servers with low Python versions depending on the version, and isn't suitable for large-scale distribution as it connects to each target via SSH | Requires the installation of an agent called "minion" on the target server, and the community support is relatively weak |

Terraform

Terraform, a provisioning tool, was attractive for its declarative approach, allowing servers to be provisioned and orchestrated from the creation stage. In particular, Terraform records the infrastructure it manages in a "state" file, so it can compare the current state with the desired state declared in code and bring the infrastructure to the desired state at any time. This makes it easy to scale out, scale in, and configure the overall infrastructure.

If we were in an environment where we could freely choose the cloud service provider (CSP), such advantages could have been a clear reason for adoption. However, LINE Pay had restrictions such as needing to use a network and server environment with enhanced security due to the nature of financial services where security is important, unlike the general cloud service environment. We judged that it would be difficult to use it freely.

Also, since we already had efficient processes and alternatives for deployment and expansion, we decided to exclude Terraform. Rather than a provisioning tool, we thought it would be better to review infrastructure management tools that are good at automating settings on servers that have already been provided and at improving operational tasks.

SaltStack

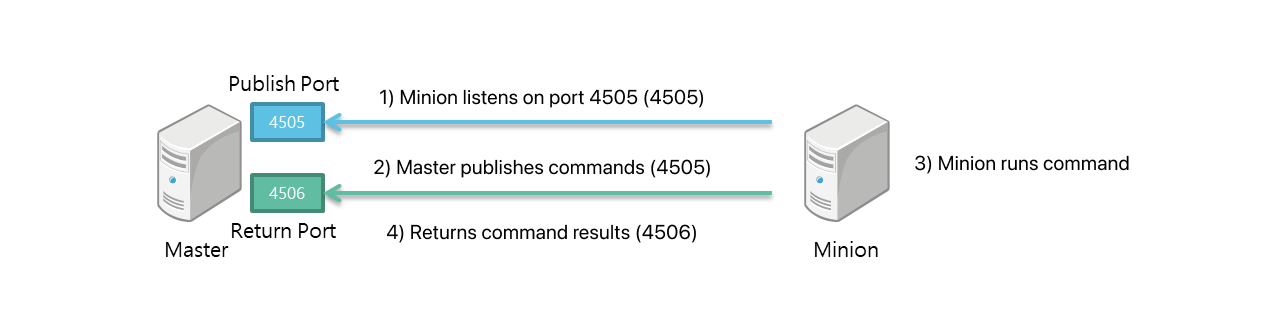

The next tool we reviewed was SaltStack. SaltStack configures an agent in a "master" and "minion" structure, allowing only authenticated targets to be operated from the master, and roles can be restricted depending on the user using SaltStack's ACL. Also, because it publishes and subscribes commands based on ZeroMQ, it's a suitable tool for managing large-scale infrastructure.

However, we needed to provide an interface that developers could use directly, and the open-source version of SaltStack, unlike the enterprise version, didn't officially provide a UI. We could have developed a UI on top of SaltStack's permission management ourselves, but managing permissions by editing the configuration file of the configuration automation tool didn't seem safe. Besides, we had planned from the initial design stage to move permission management to a separate system, so this feature wasn't a big advantage for us.

The decisive reason for not choosing SaltStack was that installing minions on very old or outdated target servers would inevitably require changes such as installing new packages. This didn't fit our goal of leaving the target servers unchanged. SaltStack is a very good configuration automation tool, but a simpler structure was better suited to our goal of providing a tool from the user's perspective, not the admin's.

Ansible

Launched in 2012, Ansible has steadily increased its market share, has reached a certain level of stability, and offers many cases to refer to. Ansible and SaltStack serve the same purpose. The most visible difference is the communication method: SaltStack operates only authenticated targets from the master through agents, whereas Ansible communicates with remote hosts over SSH.

Ansible can configure setting automation by accessing servers based on the well-known method of using SSH keys. Of course, there is a disadvantage that the communication speed is slower than agent-based tools, but the SSH and WinRM-based connection method has a higher security level than other communication methods and has the advantage of not requiring a separate agent. Also, it has the flexibility to integrate modules developed by users when needed and can refer to various use cases posted on the Ansible community. SaltStack can limit permissions, but as mentioned earlier, we planned to separate permissions to an external system, which actually worked as an advantage.

Web console review - AWX vs. Rundeck

The tool we wanted to provide had to be used not only by admins but also by general developers, so we needed a more user-friendly UI. For this, we reviewed AWX and Rundeck.

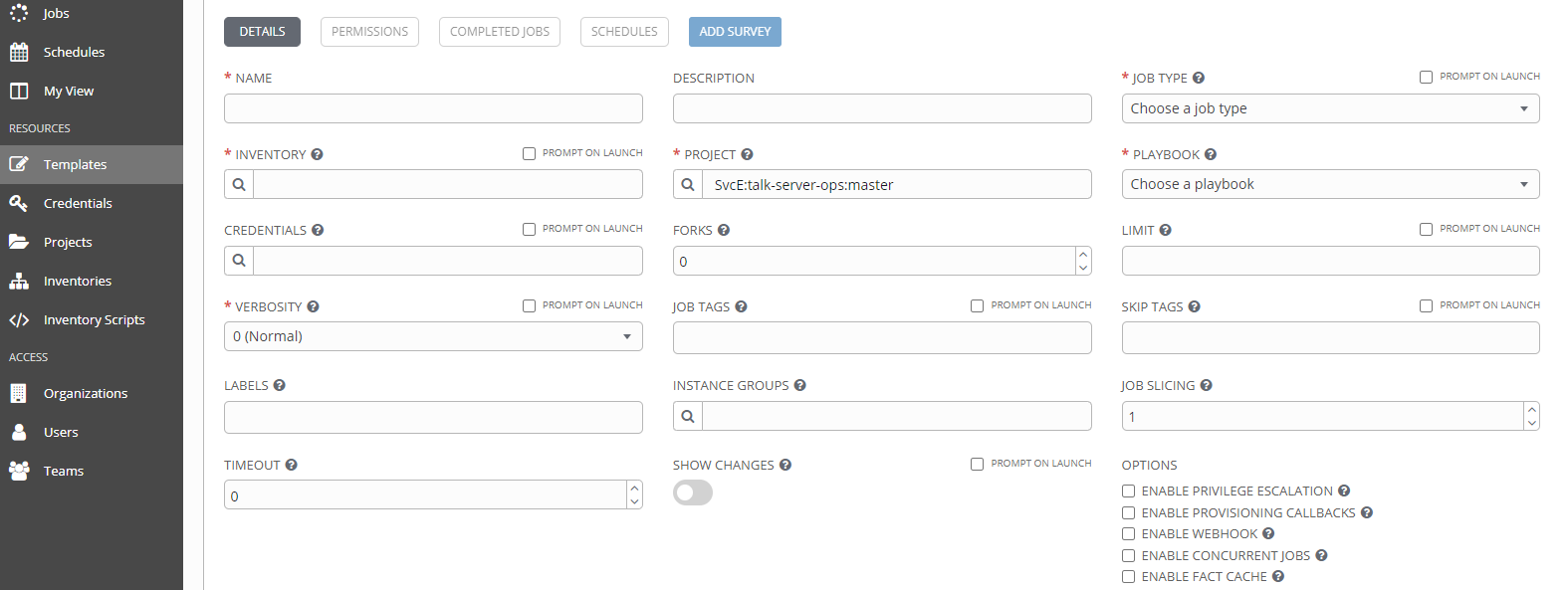

AWX

Ansible provides the following AWX UI in both enterprise and open-source versions. AWX has the advantage of having a high adoption rate, giving us many cases to reference when we adopt it ourselves. After going through internal PoC, it met all the necessary requirements in terms of functionality such as permission restrictions, authentication methods, and scheduling.

However, AWX is difficult to understand without learning Ansible, and it exposes items on the screen that don't necessarily need to be displayed, which makes it feel a bit difficult to use. Engineers familiar with operational tasks can use it without any problem, so it could be a useful tool for admins, but we judged that it could be difficult for general users. For example, users who simply want to view files or check network ACLs shouldn't have to see information such as the target server's Credential, Inventory, or Playbook, because the aforementioned information requires some understanding of Ansible's concepts.

Also, we decided to review a more scalable tool, not a tool that depends on a specific language (Ansible), because we expected that there would often be cases where users want to simply implement the necessary functions with scripts such as Shell or Python, or paste scripts they were already using.

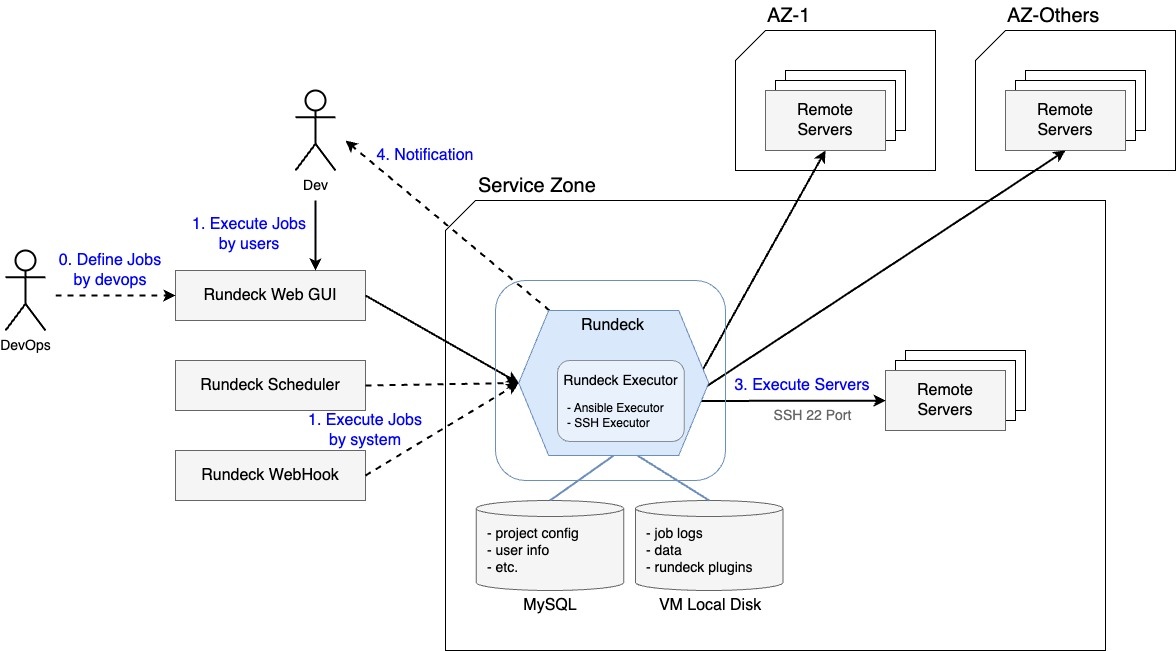

Rundeck

Rundeck is an open-source automation platform, now maintained by PagerDuty, that can schedule, execute, and manage tasks. It's not a recently released tool, but many admins continue to use it because it's easy to install and convenient to use.

As Rundeck has been around for a long time, it has been continuously updated, and in addition to basic functions such as webhooks and scheduling, it also supports integration with various plugins such as HashiCorp's Vault. So, we judged it as a tool worth reviewing. The currently supported features were as follows.

| Category | Support status |
|---|---|
| UI convenience | Supports creating simple input formats like radio buttons, check boxes, and select boxes. |
| Execution method | Supports Ansible, local, SSH, Stub |
| Infrastructure resource management | Supports infrastructure resource management methods such as Ansible Inventory, scripts, and URL. |
| Access control | Provides YAML format role-based access control and group-level settings |
| Login method | Supports pre-authentication, SSO, LDAP |
| Audit | Saves execution records in Rundeck DB and logs separately |
| Others | Supports webhook API, scheduler function |

Tool selection result - Ansible + Rundeck

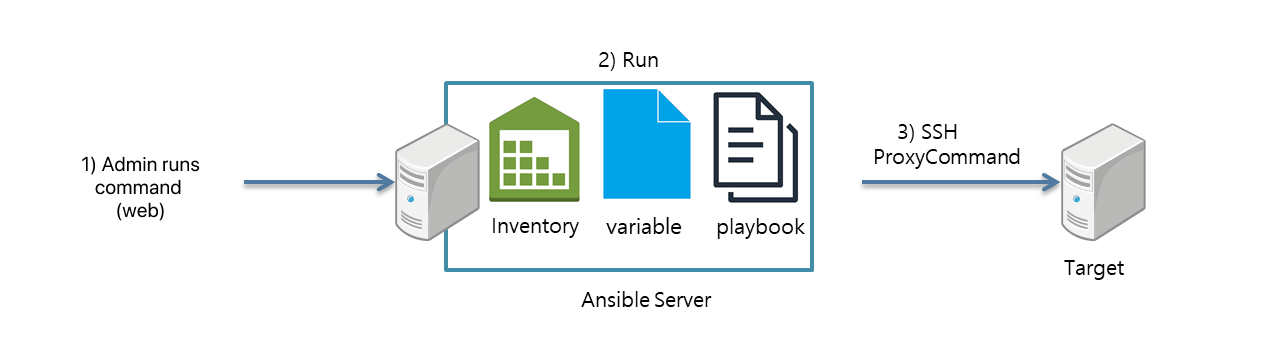

In conclusion, we chose Ansible as a tool for system configuration management, and Rundeck as a tool to define workflows and select remote servers to execute workflows defined by Ansible.

The biggest advantage of Rundeck was that its UI could be configured intuitively: it can be customized so that even first-time users can use it without training. Adopting Rundeck also secured scalability. Rundeck doesn't require Ansible to execute tasks, so admins can hook up existing shell or Python operation scripts directly. This let us increase usability without spending too much time on migration.

Systematization and automation of operations

LINE Pay operates various environments together, such as physical servers and virtual server environments, as well as Kubernetes environments, depending on the characteristics of the services provided. Kubernetes is a widely used platform for deploying and managing containerized applications. It can manage the preconfiguration of applications in container images. However, in physical server or virtual server environments that don't use Kubernetes, the admin must handle it directly.

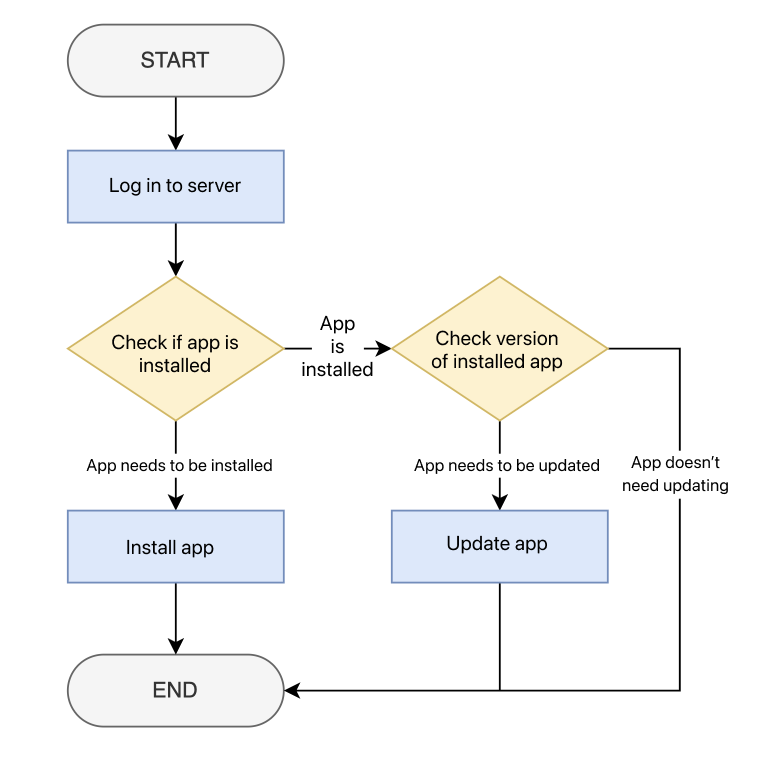

For example, if you need to install an application in a virtual server environment, the admin will install the application through the following steps.

- Login to the server environment.

- Check if the application to be installed is installed.

- If installation is needed → Install the application.

- If installation isn't needed → Check the application version information.

- If an update is needed → Update the application.

- End
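The manual steps above boil down to a small decision per server. The following is a minimal sketch of that decision only, not the actual playbook logic; the function name, host names, and version strings are illustrative assumptions.

```python
from typing import Optional


def decide_action(installed_version: Optional[str], target_version: str) -> str:
    """Return the step the workflow would take for one server."""
    if installed_version is None:
        # The application isn't installed yet.
        return "install"
    if installed_version != target_version:
        # Installed, but not at the version we want.
        return "update"
    # Installed and already at the target version.
    return "none"


# Hypothetical servers mapped to the version currently installed (None = missing).
servers = {"web-01": None, "web-02": "9.0.80", "web-03": "9.0.85"}
plan = {host: decide_action(version, "9.0.85") for host, version in servers.items()}
```

Here `plan` maps `web-01` to `"install"`, `web-02` to `"update"`, and `web-03` to `"none"`. Writing the decision as code is what lets it be applied identically to every server.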

In practice, the flow is often much more complex than the example above. Past experience also shows that many problems start with human error, so no matter how simple the task is, mistakes can happen during the work. If we only had 10 servers to manage, it might be more efficient for a person to perform even a complicated workflow by hand. However, LINE Pay has thousands of servers, so managing all of them manually would be a huge waste of time.

Our team wanted LINE Pay developers to spend less of their time on menial tasks. So, we started to systematize and automate operational tasks by applying the automation tools we had selected. In doing so, we ran into one problem.

Problems that can occur when applying Ansible directly to the LINE Pay operating environment

As mentioned earlier, after comparing several automation tools, we decided that Ansible was the most suitable tool and chose it. However, we realized that applying the common Ansible method of setting and configuring the entire infrastructure directly to the LINE Pay service environment could pose a problem. This is due to the fact that server configurations in the LINE Pay service environment can vary based on the characteristics of the service.

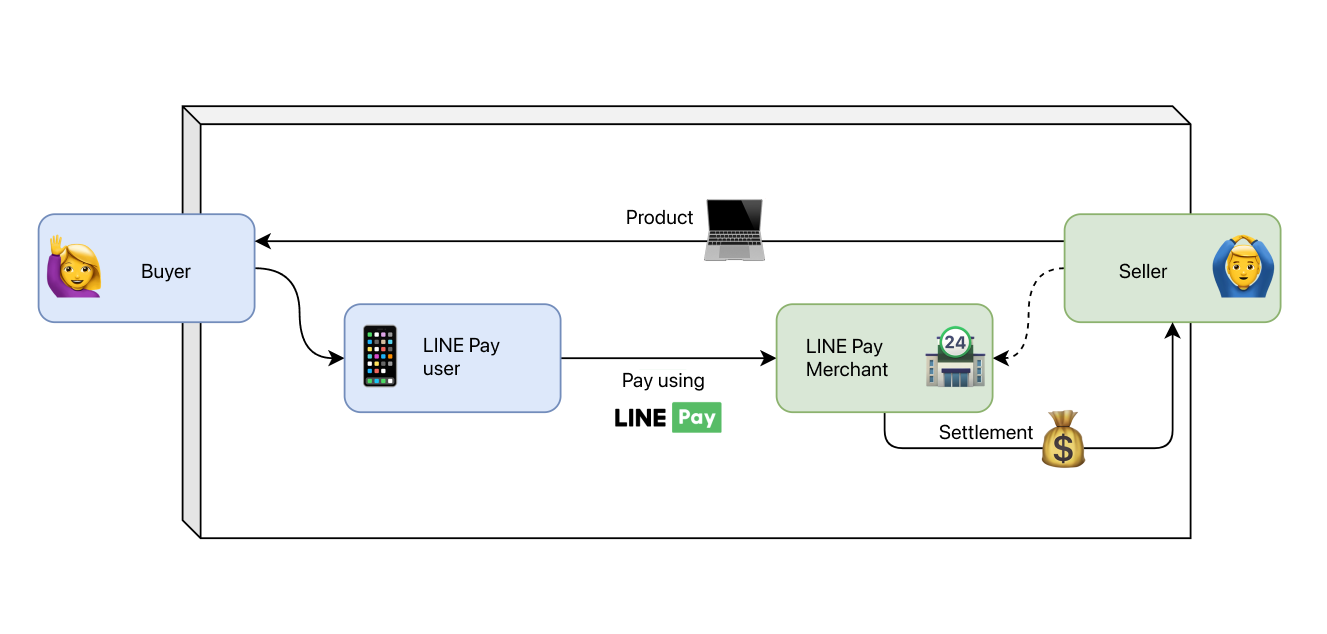

LINE Pay service connects users and merchants in the process of trading goods between sellers and buyers, helping everyone to make transactions more conveniently.

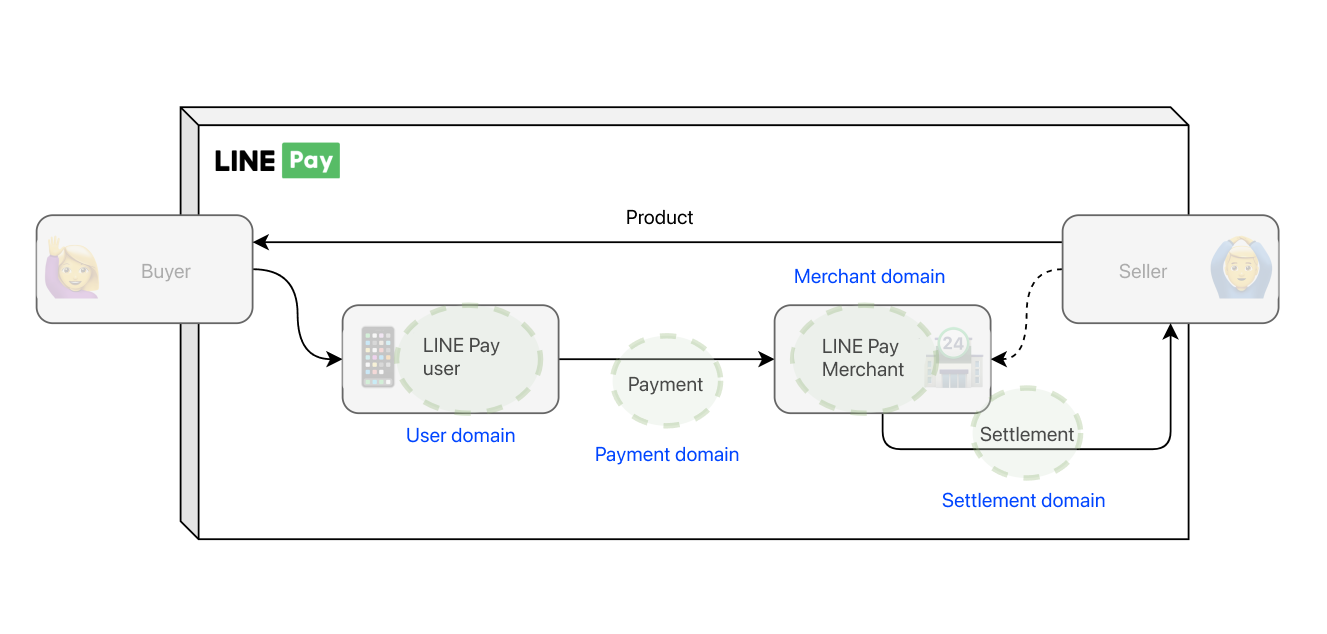

To handle such processes, we deal with several main business domains such as members, merchants, accounts, payments, settlements, events, and discounts. Each main business domain is further divided into various sub-domains, totaling about 100. Each sub-domain can have a different server group.

In other words, to manage the LINE Pay service, you have to check the application list and version installed in dozens of server groups and select the appropriate Ansible playbook. In this situation, we determined that it's difficult to use the common method of setting and configuring the entire infrastructure.

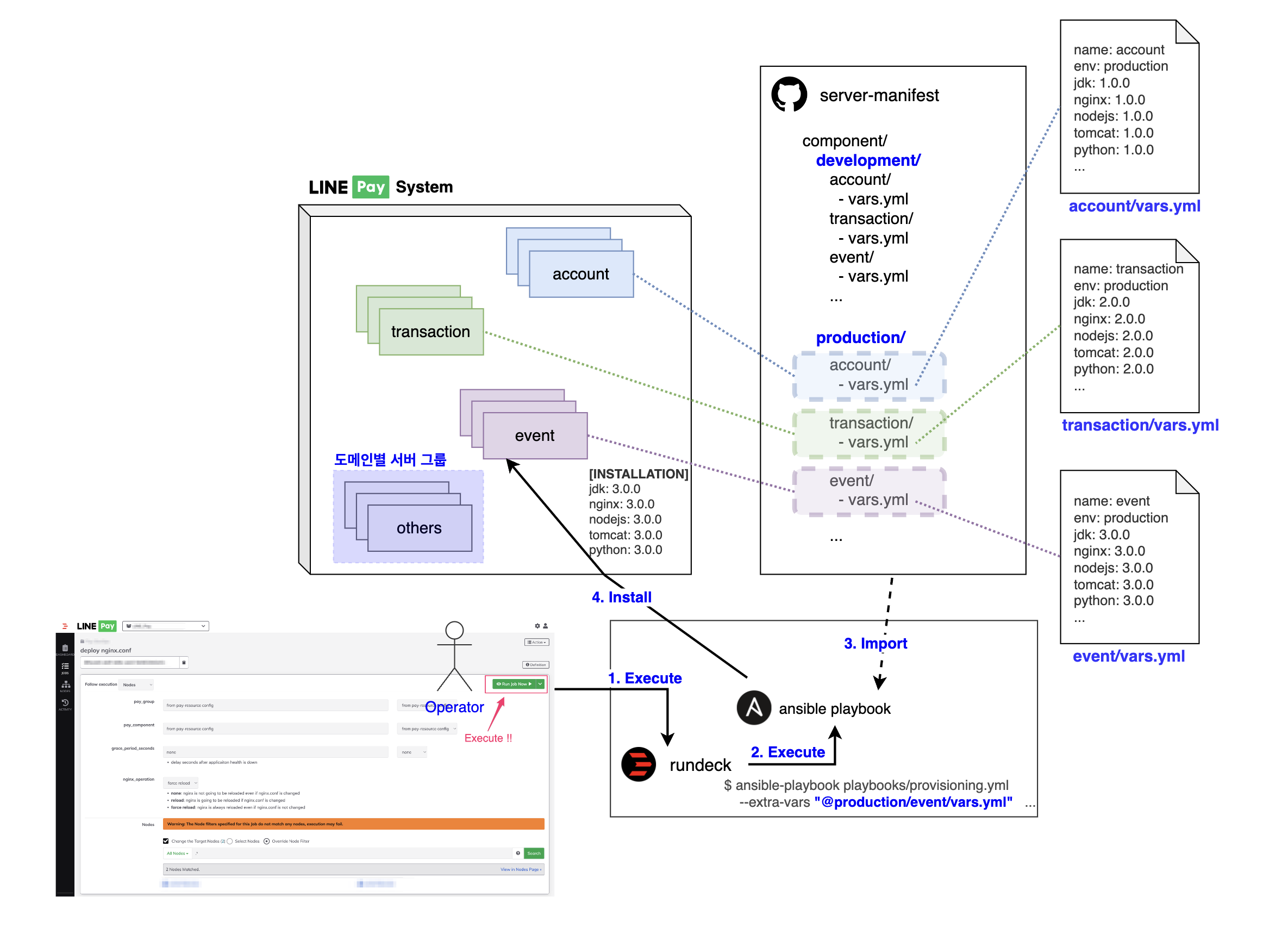

Solving the problem of managing application versions by server group

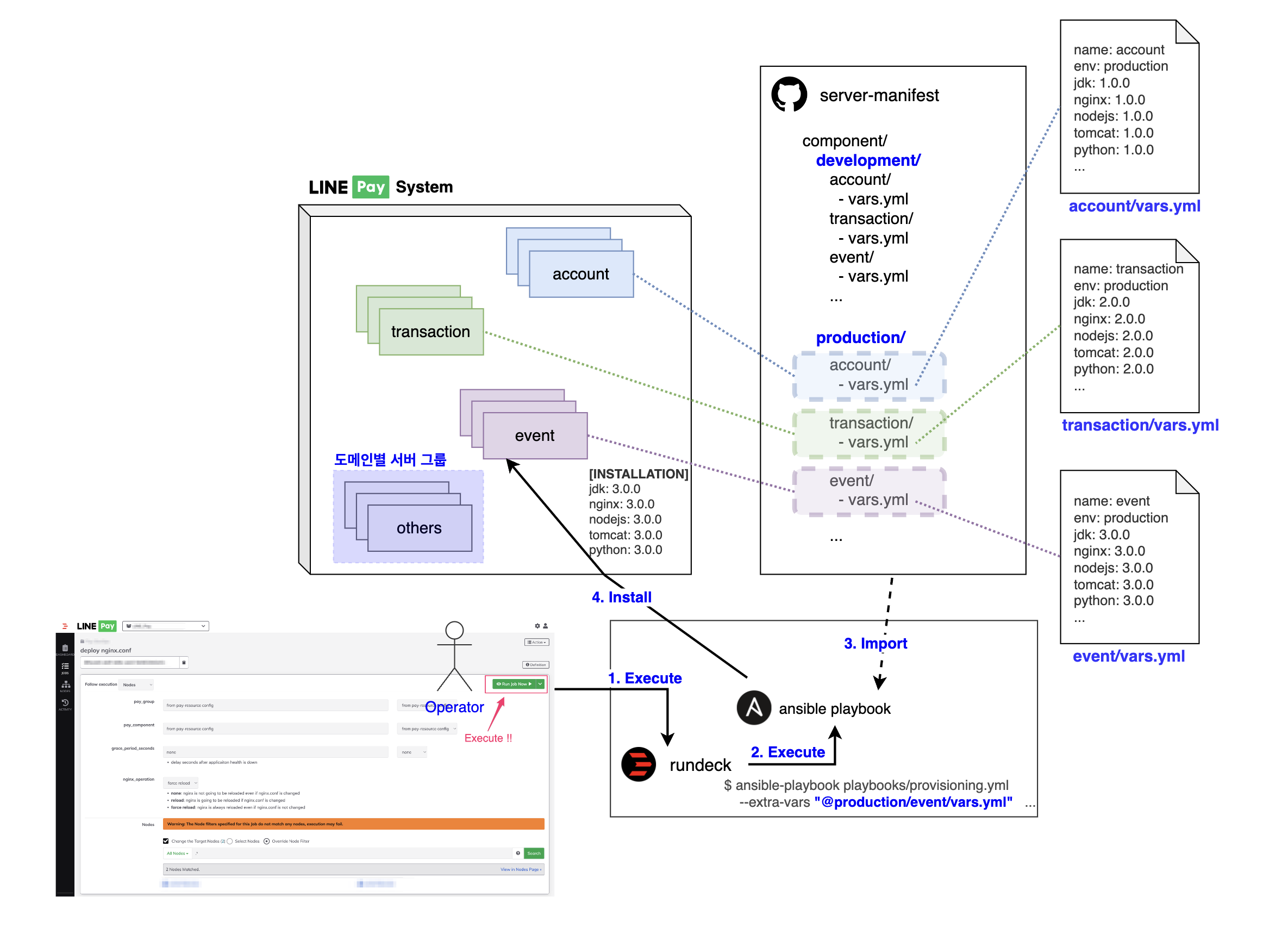

In the end, we had to first address the issue of managing application versions by server group. The basic concept of the solution is to record the application and its installation version used in the server group and refer to it every time an Ansible playbook is executed. To make this concrete and systematic, we set up a server configuration information file in the following structure to manage and use.

- Create a new Git repository to collect server group information (hereinafter referred to as 'server information Git').

- Create a YAML file with the server group name in the server information Git (hereinafter referred to as 'server information YAML file')

- After investigating the application and version installed in the server group, record it in the server information YAML file according to a predetermined format.

- Each time Ansible playbook is executed, it dynamically fetches the server information YAML file and utilizes the application version information.

Of course, when developing the Ansible playbook, it required a bit more effort as the script had to be written with reference to the server information YAML file. However, since there was no need to directly access the server and check anymore, we were able to reduce repetitive server tasks and automate them in the LINE Pay service environment.
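To illustrate the lookup described above, here is a minimal sketch in which a dictionary stands in for the parsed content of one server information YAML file. The article doesn't show the real file format, so the field names, the group name, and the versions are hypothetical, as are the two helper functions.

```python
# Stand-in for the parsed content of one server information YAML file.
# The field names are hypothetical; the real format isn't shown in the article.
SERVER_GROUP_INFO = {
    "server_group": "payment-api",
    "applications": {"jdk": "17.0.9", "tomcat": "9.0.85"},
}


def resolve_version(group_info: dict, application: str) -> str:
    """Return the version recorded for an application in a server group."""
    try:
        return group_info["applications"][application]
    except KeyError:
        raise LookupError(
            f"{application!r} is not recorded for {group_info['server_group']!r}"
        ) from None


def build_extra_vars(group_info: dict, application: str) -> dict:
    """Build variables a playbook run could receive instead of hard-coded versions."""
    return {
        "target_group": group_info["server_group"],
        "app_name": application,
        "app_version": resolve_version(group_info, application),
    }


extra_vars = build_extra_vars(SERVER_GROUP_INFO, "tomcat")
```

Failing loudly when an application isn't recorded is a deliberate choice in this sketch: a missing entry should stop the run rather than let a playbook guess a version.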

Role-based permission separation

Since security and stability are particularly important for fintech services, we're always considering ways to enhance these aspects. As part of this, we decided to manage access rights to the development and operation environments in a more finely separated manner, according to the role of the person in charge. The goal was to minimize the admins accessing the operating environment to prevent security incidents, to clearly designate the handler in case of an emergency, and to broaden the authority of the development environment. This allows developers to use the environment more freely and increase the production speed of the service.

The work of separating the development and operation environments can be summarized into three points from a functional perspective.

- Clearly distinguish the tasks that can be performed according to the role of the person in charge.

- Distinguish the list of servers that can be viewed according to the role of the person in charge.

- Keep work processing history for a certain period to provide information needed for auditing activities.

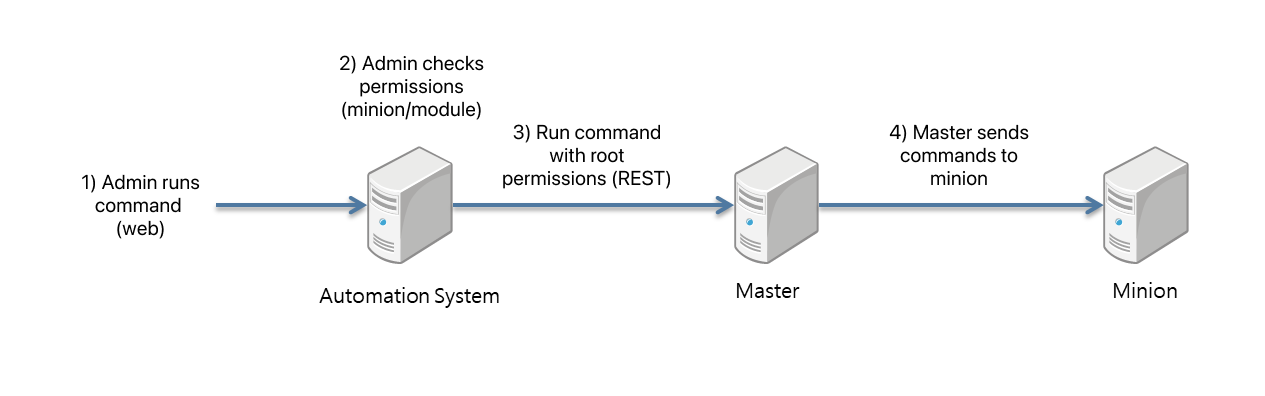

To meet all these conditions, we used Rundeck's ACL.

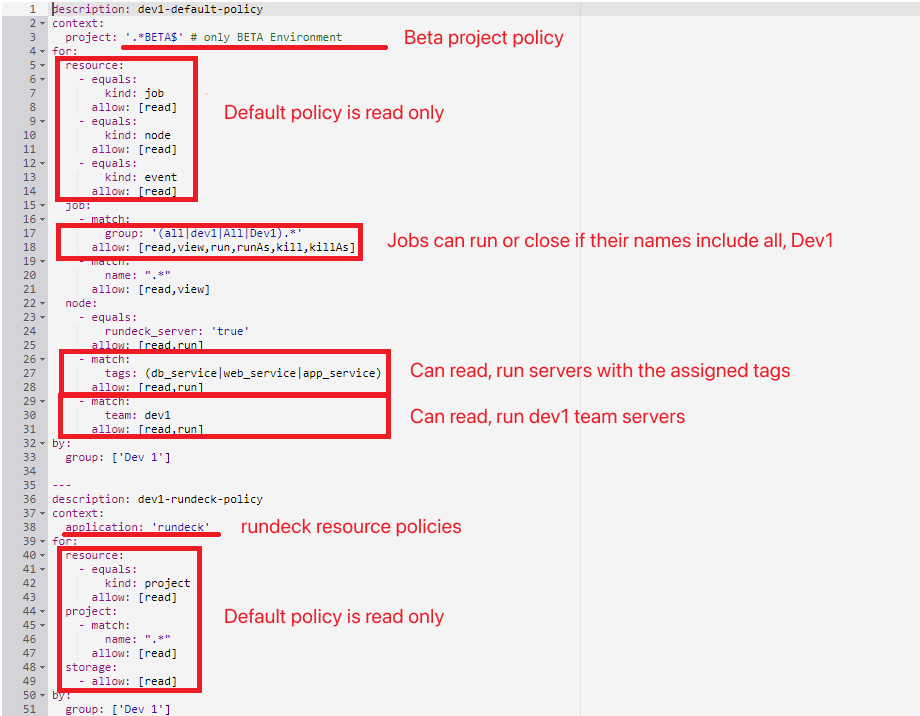

Separating development and operation roles and permissions by task

Rundeck ACL provides simple yet powerful access control features. Since you can apply multiple rules at the same time in YAML format, most of the requirements that the admin wants can be met. As we had to distinguish the roles of developers and admins, we basically separated access rights by team according to the role of the team, and applied a method of granting exceptional access control policies to the necessary personnel depending on the situation. Let's take a look at the following permission definition example.

| Role name | Server view | Job view | Job execution | RBAC modification permission | Description |
|---|---|---|---|---|---|
| Dev1 Team | Can only view servers assigned to Dev1 Team | Can view all jobs | Can only execute jobs assigned to Dev1 Team | No | Has permission to view servers assigned to Dev1 Team, and execute jobs allowed for Dev1 Team or all on Dev1 Team servers |
| Dev2 Team | Can only view servers assigned to Dev2 Team | Can view all jobs | Can only execute jobs assigned to Dev2 Team | No | Has permission to view servers assigned to Dev2 Team, and execute jobs allowed for Dev2 Team or all on Dev2 Team servers |
| Admin | Can view all servers | Can view all jobs | Can execute all jobs | Yes | Has permission to view all servers, and execute or modify all jobs |
| Super Admin | Can view all servers | Can view all jobs | Can execute all jobs, ad-hoc | Yes | Has permission to use Rundeck's ad-hoc function in addition to Admin authority |
| Temp | Can only view servers set in advance | Can view all jobs | Can only execute jobs set in advance | No | Has permission granted to developers who need temporary authority or are difficult to separate into teams |

(RBAC: Role-Based Access Control)

In the above example, roles are grouped based on the team. Roles such as Dev1 Team, Dev2 Team, which can be divided according to the operating service, are created as role groups based on the team, and access rights are separated so that only those teams can view the server. Job execution permissions were also separated according to the characteristics of each team, considering data batch jobs or server synchronization jobs. Tasks that do not affect the service, such as file viewing, system usage rate, and network ACL viewing, were allowed to all so that all developers can execute them directly without requesting the admin.

The Admin role can view all servers, execute or modify jobs, and modify RBAC permissions. There was only one permission that was restricted, and it's the remote command function provided by Rundeck. While it's a very convenient feature, arbitrary commands that are not templated can cause unpredictable situations. So, the function usage permission was granted only to the Super Admin role, so that it can be used only when absolutely necessary.
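The role semantics described above can be modeled in a few lines. This is only an illustrative sketch of the rules in the table, not Rundeck's ACL engine or its policy format; the `Role` class, the team identifiers, and the `"all"` marker for jobs open to everyone are assumptions, and the Temp role is omitted for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Role:
    name: str
    teams: frozenset          # teams the role covers; "*" means every team
    adhoc: bool = False       # may use the ad-hoc remote command function
    modify_rbac: bool = False


ROLES = {
    "dev1": Role("Dev1 Team", frozenset({"dev1"})),
    "dev2": Role("Dev2 Team", frozenset({"dev2"})),
    "admin": Role("Admin", frozenset({"*"}), modify_rbac=True),
    "super_admin": Role("Super Admin", frozenset({"*"}), adhoc=True, modify_rbac=True),
}


def can_view_server(role: Role, server_team: str) -> bool:
    """A role sees a server if it covers every team or the server's team."""
    return "*" in role.teams or server_team in role.teams


def can_run_job(role: Role, job_team: str, server_team: str) -> bool:
    """job_team is the owning team, or "all" for jobs open to everyone."""
    if not can_view_server(role, server_team):
        return False
    return "*" in role.teams or job_team == "all" or job_team in role.teams


def can_run_adhoc(role: Role) -> bool:
    """Only roles explicitly flagged for ad-hoc commands may run them."""
    return role.adhoc
```

With these rules, a Dev1 Team member can run a job marked `"all"` on a Dev1 server but not a Dev2 job, and the Admin role can run every job yet still can't issue ad-hoc commands.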

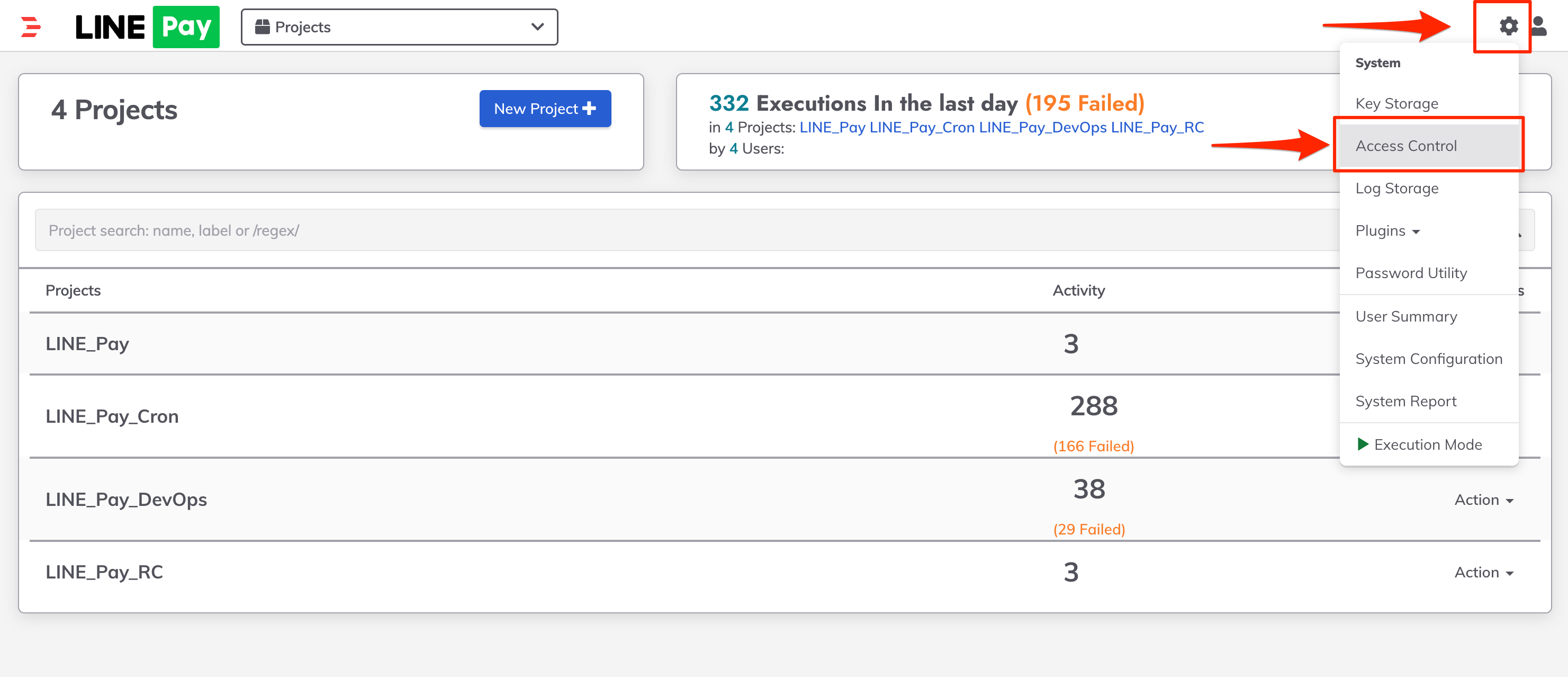

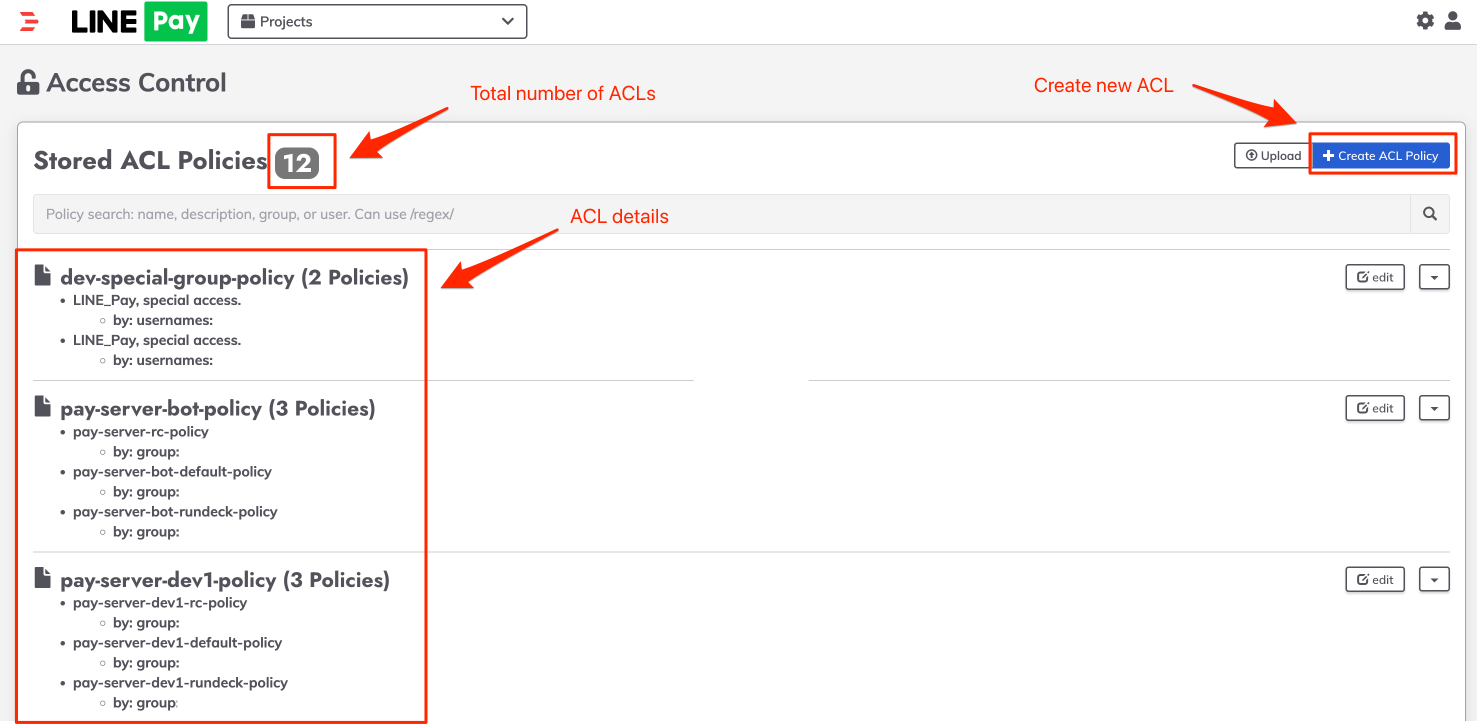

Like this, Rundeck's ACL has a high degree of freedom to define most situations in YAML format. Also, you can easily proceed with the settings as follows.

First, select Access Control from the Rundeck main screen. To set the ACL, you must be logged in as a user with Admin or higher permissions.

On the ACL page, you can check the total number of defined policies and all information, and if you select Create ACL Policy, you can define a new ACL policy.

Detailed policies can be defined in YAML format.

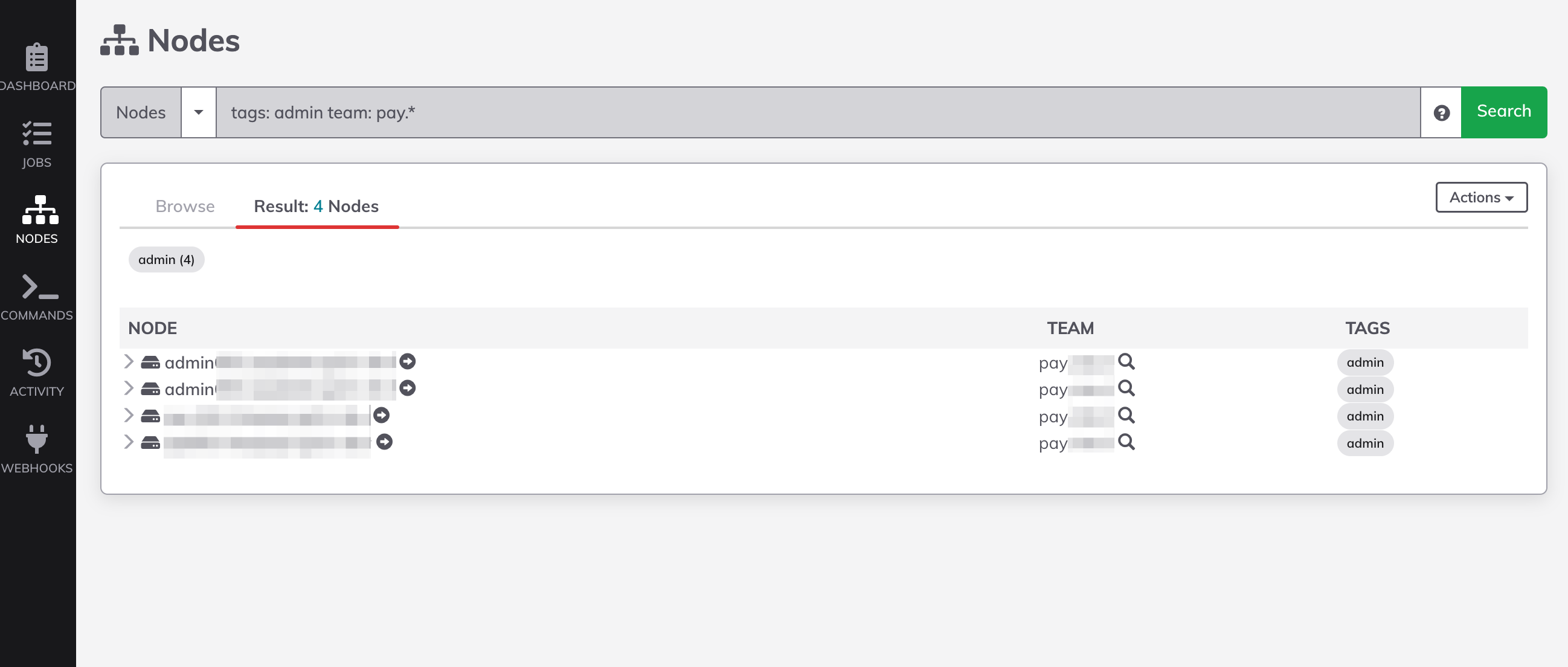

Restrict access only to servers managed by the user

LINE Pay uses thousands of servers to operate its services. If everyone can view all servers and execute tasks on them, it can become a security vulnerability, so we needed a way to let users view and run jobs on only the servers they are responsible for, depending on their role. Since Rundeck provides powerful group-based access control features, we expected this to be simple, but the problem was that LINE Pay manages too many services and servers.

To describe Rundeck's structure very simply, there's a NODES tab that manages the list of nodes, and a "node executor" that performs the desired function on the target node. Rundeck can also be linked with external tools for task automation through various plugins. We used Ansible for configuring and performing functions on nodes.

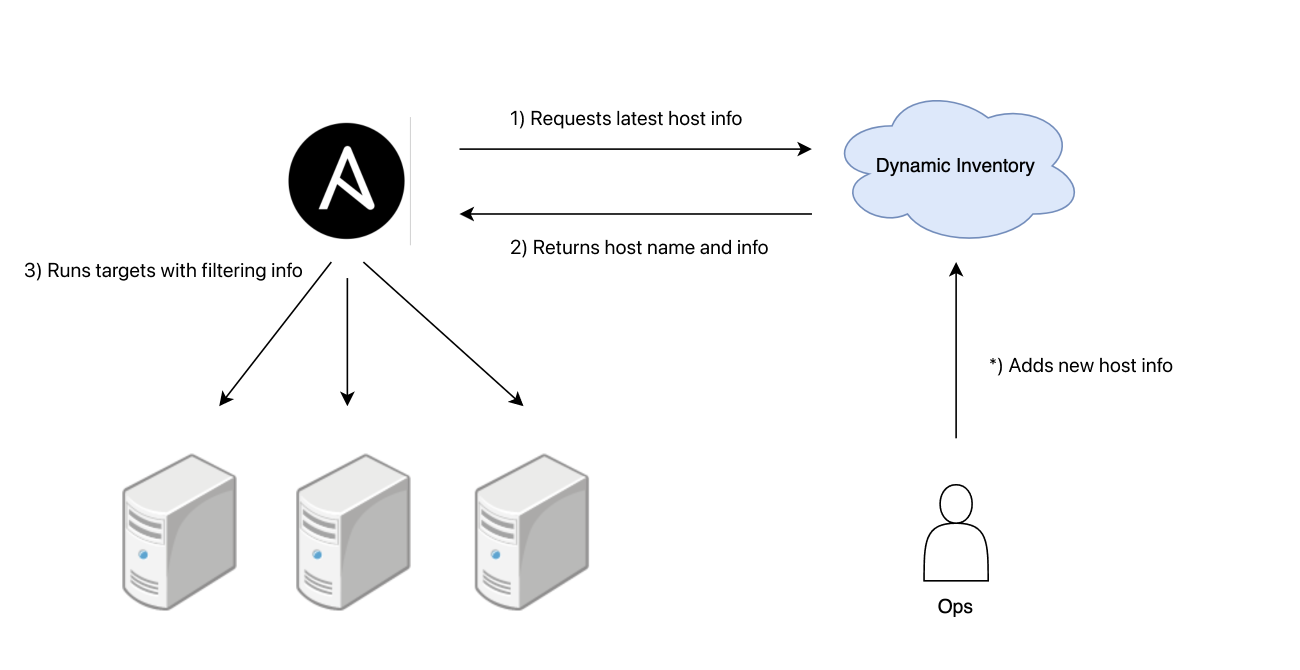

Initially, when Rundeck queried nodes, we applied the static inventory method commonly used in Ansible, in which target hosts are recorded in a file called hosts.ini. Because LINE Pay operates so many servers, it was hard to respond quickly when changes occurred, and above all, this method was difficult to automate, so we switched to the dynamic inventory method.

With the dynamic inventory method, Ansible fetches the host list dynamically, in our case through a script written in Python. Switching from the static method to the dynamic method gives the following four advantages.

- Flexible management: If you implement an inventory repository yourself, you can easily add or delete hosts through API.

- Inventory automation: It's easy to manage because targets are automatically changed without changing the repositories of Rundeck or Ansible.

- Enhanced security: Since hosts are not managed statically in a specific file but stored in a separate service, inventory information can only be viewed in a limited environment.

- Smooth inventory integrated management: Depending on the situation, you may need to manage targets using various services such as AWS, OpenStack, and Kubernetes. Ansible provides dynamic inventory plugins for various services.

We set up a separate dynamic inventory service that stores host information as standardized data, and stored the list of servers used in LINE Pay there together with meaningful tags. In Ansible, instead of hosts.ini, we used a Python script that converts the response from the dynamic inventory server into YAML-based Rundeck host information. The person in charge and the team are kept as node attributes in Rundeck, and with Rundeck's access control function, logged-in users can view only the servers they manage. This is how we achieved the goal.
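As a rough sketch of that conversion, the snippet below turns inventory records into node entries keyed by node name, with the team kept as an attribute. The record shape follows the server registration example in this post, but the output fields and both function names are assumptions, and the filtering function only imitates the effect of the access control, which in the real setup is enforced by Rundeck's ACL.

```python
def to_rundeck_nodes(servers: list) -> dict:
    """Map inventory records to node entries keyed by node name."""
    nodes = {}
    for server in servers:
        name = server["hostName"]
        tags = server.get("tags", {})
        nodes[name] = {
            "nodename": name,
            "hostname": name,
            "tags": sorted(tags.values()),   # tag values become searchable tags
            "env": server["env"],
            "team": tags.get("team", ""),    # kept as an attribute for access control
        }
    return nodes


def visible_nodes(nodes: dict, user_teams: set) -> dict:
    """Keep only the nodes owned by one of the user's teams."""
    return {name: node for name, node in nodes.items() if node["team"] in user_teams}


# Record shaped like the registration example; values are illustrative.
servers = [
    {
        "hostName": "admin-server-01",
        "env": "beta",
        "tags": {"component": "admin", "team": "pay-dev"},
    }
]
nodes = to_rundeck_nodes(servers)
```

A user in the `pay-dev` team would see `admin-server-01` through `visible_nodes(nodes, {"pay-dev"})`, while a user in any other team would get an empty result.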

The overall structure can be represented as follows.

The dynamic inventory server is implemented as an API server that can register, query, delete, and update the server and server metadata that are targets of Ansible. The admin can manipulate server information through the API as follows.

```http
// Server registration
POST localhost:8080/v1/server?componentName=admin
content-type: application/json

{
  "hostName": "admin-server-01",
  "env": "beta",
  "tags": {
    "component": "admin",
    "team": "pay-dev"
  },
  "labels": {
    "healthUrl": "http://foobar.linecorp.com/status"
  }
}

// Server query
GET localhost:8080/v1/server?query=componentName:admin-server-01

// Server deletion
DELETE localhost:8080/v1/server?hostName=admin-server-01
```

Instead of hosts.ini, the Ansible inventory holds a script that queries server information through the API.

```python
# Written in pseudocode: _check, _get_host_list, and _convert_to_ansible_way
# are helpers that are not shown here.
# see: https://docs.ansible.com/ansible/latest/inventory_guide/intro_inventory.html
def get_inventory_list(env):
    # Environment information verification
    _check(env)

    # Query host information by environment
    # API response example: [{"hostname": "linepay_host001", "ip": "0.0.0.0", "info": {...}}]
    host_list = _get_host_list(env)

    # Create response object
    dict_data = {}
    dict_data["_meta"] = {}
    dict_data["_meta"]["hostvars"] = _convert_to_ansible_way(host_list)
    return dict_data
```

If the admin registers the admin component on the dynamic inventory server as in the example above, the server information appears when you search with the following filter in the NODES tab of Rundeck.

tags: admin team: pay-dev

Difficulties encountered in separating development and operation environments

As mentioned in the introduction, the main reason for automating the LINE Pay service environment was to improve the management method and establish a system to create a safer operating environment. To achieve this, we separated the development environment and operation environment, greatly limiting the developer's access to the service operation environment server. Since we previously allowed developers to access the operation environment for various purposes, restricting their access through this work meant that the admin, who had access rights to the entire infrastructure, had to handle the operation environment service work. This inevitably led to many trials, errors, and difficulties.

The admin who took over the work inevitably had difficulty being aware of the past service change history, making it easy to overlook points that could lead to failure in the operation environment. This could be a factor that could cause various failures. In addition, tasks like changing middleware settings such as Tomcat and NGINX are realistically difficult to properly verify before applying to the operating environment. Even if it's possible, there are many restrictions, so it was almost impossible for the admin to secure a guarantee against failure and proceed with all tasks.

Also, while we separated the development and operation environments to ultimately improve the reliability of the service, there are cases where a limited number of people have to operate complex and numerous domains for business reasons. In such cases, it's important to recognize the realistic limits that can occur and to prepare measures to overcome them in order to safely achieve the original goal. For this, we considered ways to increase the reliability of operation work.

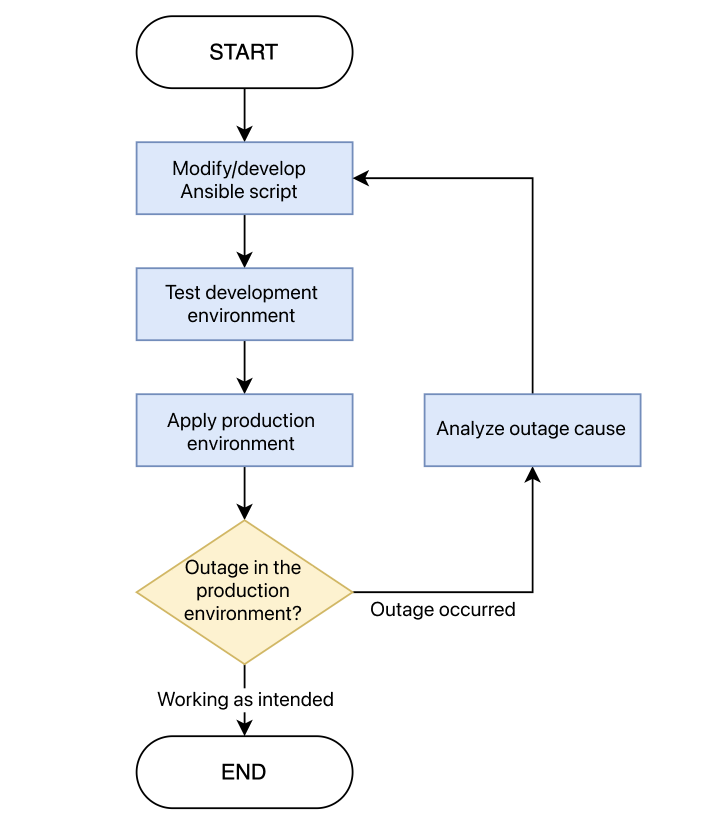

Increasing the reliability of operation work

In almost all engineering environments, testing is treated as a very important area, not just development. In the software industry, the importance of testing has grown to the point where various forms of development methodologies reflecting this trend have emerged. In line with this, various attempts are being made in the area of testing and automation in infrastructure, but it's not easy to apply even a more developed structure due to the high risk and cost of change in infrastructure.

Especially in the operation environment, when an emergency situation occurs, the top priority is to return to normal service. Therefore, it's more important to solve and supplement the problem each time, rather than improving the basis. Many cases consider it sufficient to provide and maintain a level where simple function tests can be performed even in the development environment. Therefore, the infrastructure configuration can greatly differ between the operation and development environments, even if they are in charge of the same business domain. Over time, this difference grows, and at some point, testing infrastructure changes in the development environment becomes a meaningless task.

Therefore, it was important to secure a test environment with a minimum level of reliability by aligning the server infrastructure configuration of the development and operation environments as closely as possible. Of course, even after such tests, unexpected problems may occur in the operation environment. But when responding to failures, the most important thing is to prevent the same failure from happening again. We judged that the best way to ensure this is to build a codified system that always produces the same result, rather than to train one engineer as an expert.

To achieve this goal, we needed to standardize how the normal state is defined precisely, so that the same operation always yields the same result. To that end, we defined a new way of working and systematized the server work process by introducing Ansible, a configuration automation tool, and Rundeck, which makes it convenient to run Ansible, so that the same failure does not recur.

- Develop Ansible script

- Test the Ansible script to be run for a specific server task in the beta environment

- Apply Ansible script to production environment

- If successful -> End

- If failed -> Analyze the cause

- Improve Ansible script if necessary depending on the type of failure
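The steps above can be sketched as a small gate that only reaches production after the beta run succeeds. This is a minimal illustration, not our actual tooling: `roll_out`, `Runner`, and `RolloutResult` are hypothetical names, and the `run` callable stands in for whatever triggers the Ansible script (for example, a Rundeck job).

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical stand-in for "run this Ansible script against this environment".
# A real setup would trigger a Rundeck job that invokes Ansible instead.
Runner = Callable[[str, str], bool]  # (script, environment) -> success?


@dataclass
class RolloutResult:
    status: str  # "done", "failed_in_beta", or "failed_in_production"
    log: List[str] = field(default_factory=list)


def roll_out(script: str, run: Runner) -> RolloutResult:
    """Run a script in beta first, and in production only if beta passes."""
    result = RolloutResult(status="done")
    for env in ("beta", "production"):
        ok = run(script, env)
        result.log.append(f"{env}: {'ok' if ok else 'failed'}")
        if not ok:
            # Stop here: the cause is analyzed and the script improved
            # before the next attempt.
            result.status = f"failed_in_{env}"
            break
    return result
```

Because a failure in beta stops the loop, a script that has not passed the beta environment is never applied to production in this sketch.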

Operation environment application and results

At LINE Pay, we created various automated tasks using Rundeck and Ansible as follows.

- Installation of various applications (JDK, Node, Tomcat, NGINX, OpenResty, Exporter)

- Application setting update (NGINX setting, Tomcat setting, dump extraction)

- Application operations (dump extraction, adding or removing service servers from the load balancer, daemon process control)

- Other information processing (ACL check, Crontab setting, certificate replacement)

Through this, developers no longer have to rely on personal memory when performing operational tasks. The history and flow of operational tasks are managed as code, and anyone can carry out a task and produce the same result, regardless of personal experience.

Now, whether a server is physical or virtual, there's no need to log in each time to check which applications are installed or how they are configured. The current state can be read from the server information YAML file, and when a change is needed, you edit the file and run a general-purpose task to reach the target state safely.
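To illustrate the idea of treating the server information file as the source of truth, here is a minimal sketch that compares a recorded desired state with what a server reports and lists the changes a run would need to make. The dictionaries, keys, version numbers, and the `plan_changes` function are all assumptions made for this example; they do not reflect the real file layout.

```python
from typing import Dict

# Hypothetical contents of a server information file after parsing
# (for example with a YAML library). Keys and versions are illustrative only.
desired = {"jdk": "17", "nginx": "1.24", "tomcat": "9.0"}

# Hypothetical state reported by the server itself.
actual = {"jdk": "17", "nginx": "1.22"}


def plan_changes(desired: Dict[str, str], actual: Dict[str, str]) -> Dict[str, str]:
    """Return what a run would have to do to reach the desired state."""
    plan = {}
    for name, version in desired.items():
        current = actual.get(name)
        if current is None:
            plan[name] = f"install {version}"
        elif current != version:
            plan[name] = f"change {current} -> {version}"
    return plan


print(plan_changes(desired, actual))
```

With the sample data, the plan contains an NGINX version change and a Tomcat installation, while the JDK entry is left out because it already matches. An empty plan means the server is already in the recorded state.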

After applying this to the operation environment for about 9 months, we were able to replace about 1,600 tasks with automation, as shown below.

| Automation item | Person in charge | Number of executions |
|---|---|---|
| Installation of various applications | Admin | 56 |
| Application setting updates | Admin | 93 |
| Certificate replacement | Admin | 51 |
| Server information inquiry - System usage and file inquiry | User | 989 |
| Network information inquiry - ACL and traceroute | User | 428 |

Automation not only eliminated the risk of accessing servers directly to install software or run simple checks, but also saved the time spent on manual tasks, increasing productivity.

Moreover, all these changes were managed through Git, requiring the review of both the development and operation teams whenever changes occurred. This double-check process ensured that changes could only be reflected in the operation environment after approval. It made the operation environment more robust and created a culture where if a failure occurs, it's everyone's responsibility, not just an individual's. This allowed us to maintain efficiency and reliability while creating a constructive operation environment.
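The double-check rule can be expressed as a simple predicate: a change is eligible only when at least one reviewer from each team has approved it. The sketch below is an assumption-laden illustration; in practice such a rule is usually enforced by the Git hosting platform's review settings, and the team names and `can_merge` function are hypothetical.

```python
from typing import Iterable, Tuple

# Team names are assumptions for this example.
REQUIRED_TEAMS = frozenset({"development", "operation"})


def can_merge(approvals: Iterable[Tuple[str, str]]) -> bool:
    """approvals holds (reviewer, team) pairs; True when every required team approved."""
    approved_teams = {team for _, team in approvals}
    return REQUIRED_TEAMS <= approved_teams
```

For example, two approvals from the development team alone would not satisfy the rule, while one approval from each team would.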

In conclusion

The LINE Pay service has experienced explosive growth over about 10 years since its launch. As various services developed rapidly at the same time, we realized the need for advanced management technology to support both functional and quantitative growth.

We codified and systematized the complex operational resources used for various purposes to make tasks more stable. When setting up new servers, defining only the information that maps the server to its service role is enough to install it with the same configuration, which also keeps the process flexible. We implemented the tasks that operators used to perform manually in Ansible and granted execution rights on the Rundeck dashboard only to authorized users, simplifying the process.

We hope this article is helpful to DevOps teams and developers looking for safer and more efficient ways to operate systems. Thank you for reading this long article.